“If only our company knew what our company knows” has been a longstanding lament for leaders: when organizations cannot find or share what they already know, they struggle to mobilize their knowledge and capabilities towards their strategic priorities. Similarly, locating knowledge gaps in the organization, whether we were initially aware of them (known unknowns) or initially unaware of them (unknown unknowns), presents opportunities to gain new capabilities, mitigate risks, and navigate the ever-accelerating business landscape more nimbly.

AI implementations are already showing signs of knowledge gaps: hallucinations, wrong answers, incomplete answers, and even “unanswerable” questions. There are multiple causes for AI hallucinations, but an important one is not having the right knowledge to answer a question in the first place. While LLMs may have been trained on massive amounts of data, that doesn’t mean they know your business, your people, or your customers. This is a common problem when organizations make the leap from how they experience “Public AI” tools like ChatGPT, Gemini, or Copilot to attempting their own organization’s AI solutions. LLMs and agentic solutions need knowledge, specifically your organization’s unique knowledge, to produce results tailored to your needs and your customers’ needs, and to help employees navigate and solve the challenges they encounter in their day-to-day work.

In a recent article, EK outlined key strategies for preparing content and data for AI. This blog post builds on that foundation by providing a step-by-step process for identifying and closing knowledge gaps, ensuring a more robust AI implementation.

The Importance of Bridging Knowledge Gaps for AI Readiness

EK lays out a six-step path to getting your content, data, and other knowledge assets AI-ready, yielding assets that are correct, complete, consistent, contextual, and compliant. The diagram below provides an overview of these six steps:

Identifying and filling knowledge gaps, the third step of EK’s path towards AI readiness, is crucial in ensuring that AI solutions have optimized inputs.

Prior to filling gaps, an organization will have defined its critical knowledge assets and conducted a content cleanup. A content cleanup not only ensures the correctness and reliability of the knowledge assets, but also reveals the specific topics, concepts, or capabilities that the organization cannot currently supply to AI solutions as inputs.

This first scenario presupposes that the organization has a clear idea of the AI use cases and purposes for its knowledge assets. Because the organization knows the questions AI needs to answer, it can target an assessment of the location and state of its knowledge assets based on the inputs required. This assessment would be followed by efforts to collect the identified knowledge and optimize it for AI solutions.

A second, more complicated scenario arises when an organization hasn’t formulated a prioritized list of questions for AI to answer. The previously described approach, which relies on drawing up a traditional knowledge inventory, will face setbacks because it may prove difficult to scale and won’t always uncover the insights we need for AI readiness. Knowledge inventories may help us understand our known unknowns, but they will not be helpful in revealing our unknown unknowns.

Identifying the Gap

How can we identify something that is missing? At this juncture, organizations will need to leverage analytics, introduce semantics, and, if AI is already deployed in the organization, use it as a resource as well. The techniques available to identify these gaps depend on whether your organization has already deployed an AI solution or is ramping up for one. Available options include:

Before and After AI Deployment

Leveraging Analytics from Existing Systems

Monitoring and assessing different tools’ analytics is an established practice to understand user behavior. In this instance, EK applies these same methods to understand critical questions about the availability of knowledge assets. We are particularly interested in analytics that reveal answers to the following questions:

- When are our people giving up when navigating different sections of a tool or portal?

- What sort of queries return no results?

- What queries are more likely to get abandoned?

- What sort of content gets poor reviews, and by whom?

- What sort of material gets no engagement? What did the user do or search for before getting to it?

These questions aim to identify instances of users trying, and failing, to get knowledge they need to do their work. Where appropriate, these questions can also be posed directly to users via surveys or focus groups to get a more rounded perspective.
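As an illustration only, two of the questions above (queries that return no results, and queries that get abandoned) can be approximated from a search-log export. The sketch below is a minimal example that assumes a hypothetical log schema (`query`, `result_count`, `clicked`); real tools expose different fields, and "abandoned" is approximated here as a search that returned results but led to no click.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SearchEvent:
    """One search from a log export. The schema is hypothetical."""
    query: str
    result_count: int  # number of results the tool returned
    clicked: bool      # whether the user opened any result


def normalize(query: str) -> str:
    # Collapse case and whitespace so near-identical queries count together.
    return " ".join(query.lower().split())


def find_gap_candidates(events, min_searches=2):
    """Return (zero_result, abandoned) lists of (query, count), most frequent first."""
    zero_result = Counter()
    abandoned = Counter()
    for event in events:
        q = normalize(event.query)
        if event.result_count == 0:
            zero_result[q] += 1
        elif not event.clicked:
            abandoned[q] += 1

    def keep(counter):
        # Ignore one-off queries; repeated failures are stronger gap signals.
        return [(q, n) for q, n in counter.most_common() if n >= min_searches]

    return keep(zero_result), keep(abandoned)


# Made-up sample data for illustration.
events = [
    SearchEvent("drone inspection checklist", 0, False),
    SearchEvent("Drone  inspection checklist", 0, False),
    SearchEvent("expense policy", 12, True),
    SearchEvent("vendor onboarding", 8, False),
    SearchEvent("vendor onboarding", 8, False),
    SearchEvent("vendor onboarding", 8, True),
]
zero_result, abandoned = find_gap_candidates(events)
print("No results:", zero_result)
print("Abandoned:", abandoned)
```

Queries that surface in either list are candidates for review rather than confirmed gaps; the knowledge may exist under a different name, which is itself worth knowing.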

Semantics

Semantics involve modeling an organization’s knowledge landscape with taxonomies and ontologies. When taxonomies and ontologies have been properly designed, updated, and consistently applied to knowledge, they are invaluable as part of wider knowledge mapping efforts. In particular, semantic models can be used as an exemplar of what should be there, and can then be compared with what is actually present, thus revealing what is missing.

We recently worked with a professional association within the medical field, helping them define a semantic model for their extensive body of content, and then defining an automated approach to tagging these knowledge assets. As part of the design process, EK taxonomists interviewed experts across all of the association’s functional teams to define the terms that should be present in the organization’s knowledge assets. After the first few rounds of auto-tagging, we examined the taxonomy’s coverage and found that a significant fraction of the terms in the taxonomy went unused. We validated our findings with our client’s experts, and, to their surprise, our engagement revealed an imbalance in knowledge asset production: while some topics were covered by their content, others were entirely lacking.

Valid taxonomy terms or ontology concepts for which few to no knowledge assets exist reveal a knowledge gap where AI is likely to struggle.
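A coverage check of this kind can be sketched in a few lines. The example below is illustrative and is not the tooling used in the engagement described above; it assumes tags are available as a simple mapping from asset ID to applied terms, and the term names and the `min_assets` threshold are hypothetical.

```python
from collections import Counter


def taxonomy_coverage(taxonomy_terms, asset_tags, min_assets=1):
    """Split taxonomy terms into (covered, gaps).

    taxonomy_terms: iterable of valid terms in the semantic model.
    asset_tags: mapping of asset id -> set of terms applied to that asset.
    min_assets: a term tagged on fewer assets than this counts as a gap.
    """
    usage = Counter()
    for tags in asset_tags.values():
        usage.update(set(tags))  # count each term at most once per asset

    terms = set(taxonomy_terms)
    gaps = sorted(t for t in terms if usage[t] < min_assets)
    covered = sorted(terms - set(gaps))
    return covered, gaps


# Made-up sample data for illustration.
taxonomy = {"Cardiology", "Oncology", "Telehealth", "Billing"}
asset_tags = {
    "doc-1": {"Cardiology"},
    "doc-2": {"Cardiology", "Billing"},
    "doc-3": {"Oncology"},
}
covered, gaps = taxonomy_coverage(taxonomy, asset_tags)
print("Covered:", covered)
print("Gaps:", gaps)
```

Raising `min_assets` surfaces thinly covered terms as well as unused ones, which matches the "few to no knowledge assets" framing above.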

After AI Deployment

User Engagement & Feedback

To ensure a solution can scale, evolve, and remain effective over time, it is important to establish formal feedback mechanisms for users to engage with system owners and governance bodies on an ongoing basis. Ideally, users should have a frictionless way to report an unsatisfactory answer immediately after they receive it, whether it is because the answer is incomplete or just plain wrong. A thumbs-up or thumbs-down icon has traditionally been used to solicit this kind of feedback, but organizations should also consider dedicated chat channels, conversations within forums, or other approaches for communicating feedback to which their users are accustomed.

AI Design and Governance

Out-of-the-box, pre-trained language models are designed to prioritize providing a fluid response, often leading them to confidently generate answers even when their underlying knowledge is uncertain or incomplete. This core behavior increases the risk of delivering wrong information to users. However, this flaw can be preempted by thoughtful design in enterprise AI solutions: the key is to transform them from simple answer generators into sophisticated instruments that can also detect knowledge gaps. Enterprise AI solutions can be engineered to proactively identify questions that they lack adequate information to answer and to flag these requests immediately. This approach effectively creates a mandate for AI governance bodies to capture the needed knowledge.
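One way to picture this design is a gate between retrieval and answer generation: if the retrieved material does not adequately support the question, the solution declines to answer and logs the question for the governance body. The sketch below is a simplified illustration under stated assumptions. The word-overlap score is a toy stand-in for whatever relevance score a real retriever provides, and `GapLog`, `answer_or_flag`, and the `threshold` value are hypothetical names and settings.

```python
import re
from dataclasses import dataclass, field


@dataclass
class GapLog:
    """Collects questions the solution could not ground (hypothetical structure)."""
    flagged: list = field(default_factory=list)


def words(text):
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def support_score(question, passage):
    # Toy relevance score: share of question words that appear in the passage.
    # A real solution would use its retriever's own relevance score instead.
    q = words(question)
    return len(q & words(passage)) / len(q) if q else 0.0


def answer_or_flag(question, passages, gap_log, threshold=0.5):
    """Return the best supporting passage, or None after flagging a knowledge gap."""
    scored = [(support_score(question, p), p) for p in passages]
    best_score, best_passage = max(scored, key=lambda sp: sp[0], default=(0.0, None))
    if best_passage is None or best_score < threshold:
        gap_log.flagged.append(question)  # route to the governance body for review
        return None
    return best_passage


# Made-up sample data for illustration.
passages = [
    "Travel expenses are reimbursed within 30 days of submission.",
    "Laptops are refreshed every three years.",
]
log = GapLog()
supported = answer_or_flag("When are travel expenses reimbursed?", passages, log)
unsupported = answer_or_flag("What is our drone inspection policy?", passages, log)
print(supported)
print(log.flagged)
```

The threshold is a design choice: set too low, the solution answers from weak evidence; set too high, it floods the governance body with flags for questions it could have answered.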

AI can move beyond just alerting the relevant teams about missing knowledge. As we will soon discuss, AI holds additional capabilities to close knowledge gaps by inferring new insights from disparate, already-known information, and connecting users directly with relevant human experts. This allows enterprise AI to not only identify knowledge voids, but also begin the process of bridging them.

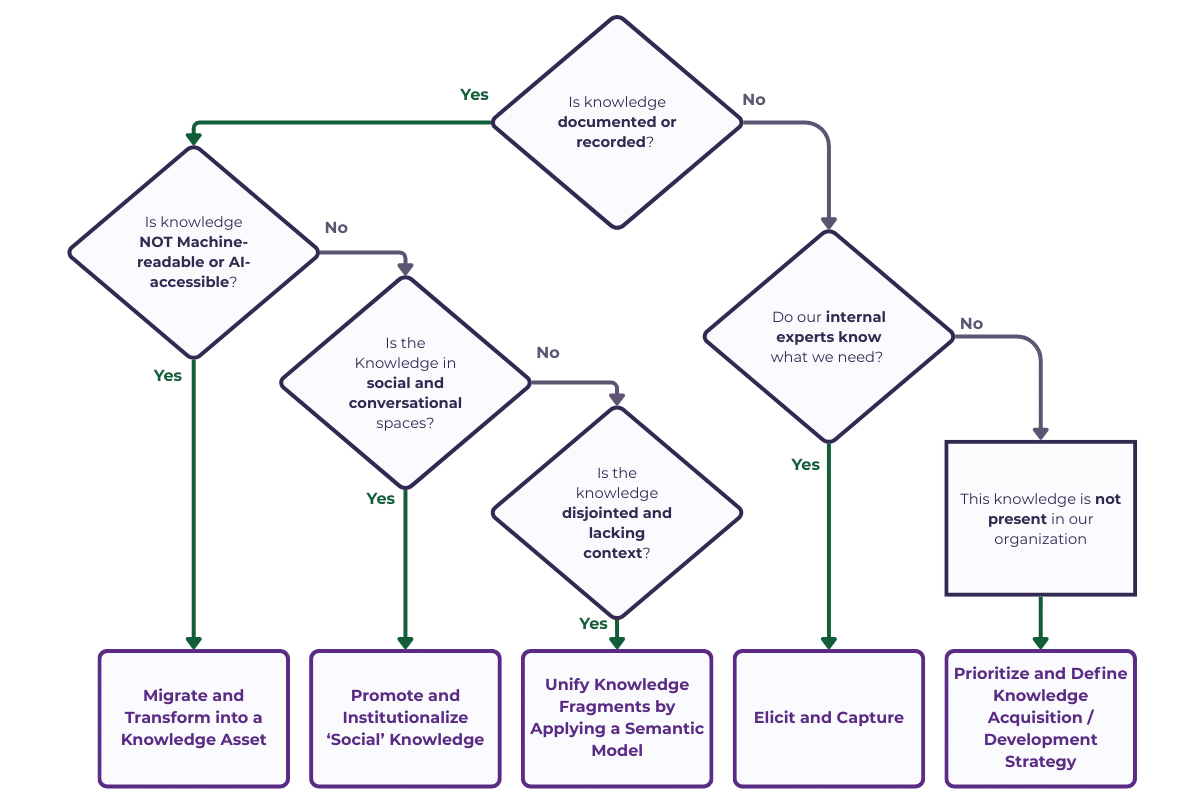

Closing the Gap

It is important, at this point, to make the distinction between knowledge that is truly missing from the organization and knowledge that is simply unavailable to the organization’s AI solution. The approach to close the knowledge gap will hinge on this key distinction.

If the ‘missing’ knowledge is documented or recorded somewhere… but the knowledge is not in a format that AI can use, then:

Transform and migrate the present knowledge asset into a format that AI can more readily ingest.

How this looks in practice:

A professional services firm had a database of meeting recordings meant for knowledge-sharing and disseminating lessons learned. The firm determined that the recordings held a great deal of knowledge “in the rough” that AI could incorporate into existing policies and procedures, but AI could not ingest the content in video format. EK engineers programmatically transcribed the videos, and then transformed the text into a machine-readable format. To make it truly AI-ready, we leveraged Natural Language Processing (NLP) and Named Entity Recognition (NER) techniques to contextualize the new knowledge assets by associating them with other concepts across the organization.

If the ‘missing’ knowledge is documented or recorded somewhere… but the knowledge exists in private spaces like email or closed forums, then:

Establish workflows and guidelines to promote, elevate, and institutionalize knowledge that had been previously informal.

How this looks in practice:

A government agency established online Communities of Practice (CoPs) to transfer and disseminate critical knowledge on key subject areas. Community members shared emerging practices and jointly solved problems. Community managers were able to ‘graduate’ informal conversations and documents into formal agency resources that lived within a designated repository, fully tagged and actively managed. These validated and enhanced knowledge assets became more valuable and reliable for AI solutions to ingest.

If the ‘missing’ knowledge is documented or recorded somewhere… but the knowledge exists in different fragments across disjointed repositories, then:

Unify the disparate fragments of knowledge by designing and applying a semantic model to associate and contextualize them.

How this looks in practice:

A Sovereign Wealth Fund (SWF) collected a significant amount of knowledge about its investments, business partners, markets, and people, but kept this information fragmented and scattered across multiple repositories and databases. EK designed a semantic layer (composed of a taxonomy, ontology, and a knowledge graph) to act as a ‘single view of truth’. EK helped the organization define its key knowledge assets, like investments, relationships, and people, and wove together data points, documents, and other digital resources to provide a 360-degree view of each of them. We furthermore established an entitlements framework to ensure that every attribute of every entity could be adequately protected and surfaced only to the right end-user. This single view of truth became a foundational element in the organization’s path to AI deployment: it now has complete, trusted, and protected data that can be retrieved, processed, and surfaced to the user as part of solution responses.

If the ‘missing’ knowledge is not recorded anywhere… but the company’s experts hold this knowledge with them, then:

Choose the appropriate techniques to elicit knowledge from experts during high-value moments of knowledge capture. It is important to note that we can begin incorporating agentic solutions to help the organization capture institutional knowledge, especially when agents can know or infer expertise held by the organization’s people.

How this looks in practice:

Following a critical system failure, a large financial institution recognized an urgent need to capture the institutional knowledge held by its retiring senior experts. To address this challenge, they partnered with EK, who developed an AI-powered agent to conduct asynchronous interviews. This agent was designed to collect and synthesize knowledge from departing experts and managers by opening a chat with each individual and asking questions until the defined success criteria were met. This method allowed interviewees to contribute their knowledge at their convenience, ensuring a repeatable and efficient process for capturing critical information before the experts left the organization.

If the ‘missing’ knowledge is not recorded anywhere… and the knowledge cannot be found, then:

Make sure to clearly define the knowledge gap and its impact on the AI solution as it supports the business. If the gap substantially affects the solution’s ability to provide critical responses, it will be up to subject matter experts within the organization to devise a strategy to create, acquire, and institutionalize the missing knowledge.

How this looks in practice:

A leading construction firm needed to develop its knowledge and practices to be able to keep up with contracts won for a new type of project. Its inability to quickly scale institutional knowledge jeopardized its capacity to deliver, putting a significant amount of revenue at risk. EK guided the organization in establishing CoPs to encourage the development of repeatable processes, new guidance, and reusable artifacts. In subsequent steps, the firm could extract knowledge from conversations happening within the community and ingest it into AI solutions, along with novel knowledge assets the community developed.
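The five cases above form a small decision tree, which can be summarized as a triage function for logged gaps. This is only a sketch: the names (`GapType`, `classify_gap`, and the keyword flags) are hypothetical, the order of the checks is an assumption, and a real gap may have more than one cause.

```python
from enum import Enum


class GapType(Enum):
    UNUSABLE_FORMAT = "recorded, but not in a format AI can use"
    PRIVATE_SPACE = "recorded, but in private spaces like email or closed forums"
    FRAGMENTED = "recorded, but fragmented across disjointed repositories"
    HELD_BY_EXPERTS = "not recorded, but held by the organization's experts"
    NOT_FOUND = "not recorded, and cannot be found"


# Remediation summaries paraphrase the guidance given for each case above.
REMEDIATION = {
    GapType.UNUSABLE_FORMAT: "Transform and migrate the asset into a format AI can ingest.",
    GapType.PRIVATE_SPACE: "Establish workflows to promote and institutionalize informal knowledge.",
    GapType.FRAGMENTED: "Unify the fragments by applying a semantic model.",
    GapType.HELD_BY_EXPERTS: "Elicit the knowledge from experts at high-value moments of capture.",
    GapType.NOT_FOUND: "Define the gap and its impact; have experts create or acquire the knowledge.",
}


def classify_gap(recorded, *, ai_readable=True, shared=True,
                 consolidated=True, experts_hold_it=False):
    """Return the GapType for a reported gap, or None if nothing blocks AI use."""
    if recorded:
        if not ai_readable:
            return GapType.UNUSABLE_FORMAT
        if not shared:
            return GapType.PRIVATE_SPACE
        if not consolidated:
            return GapType.FRAGMENTED
        return None  # recorded and available: not a knowledge gap
    return GapType.HELD_BY_EXPERTS if experts_hold_it else GapType.NOT_FOUND


gap = classify_gap(True, ai_readable=False)
print(gap.value, "->", REMEDIATION[gap])
```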

Conclusion

Identifying and closing knowledge gaps is no small feat, and predicting knowledge needs was nearly impossible before the advent of AI. Now, AI acts as both a driver and a solution, helping modern enterprises maintain their competitive edge.

Whether your critical knowledge is in people’s heads or buried in documents, Enterprise Knowledge can help. We’ll show you how to capture, connect, and leverage your company’s knowledge assets to their full potential to solve complex problems and obtain the results you expect out of your AI investments. Contact us today to learn how to bridge your knowledge gaps with AI.