In an era where natural language processing (NLP) tools are becoming increasingly sophisticated and accessible, many look to automate text-related processes such as recognition, summarization, and generation to save crucial time and effort. Currently, both machine learning (ML) models and large language models (LLMs) are being used extensively for NLP. Choosing a model to use is dependent on various factors depending on client needs and consultant team capabilities. Summarization through machine learning has come a long way throughout the years, and is now an extremely viable and attractive option for those looking to automate natural language processing.

In this blog, I will dive into the history of NLP and compare and contrast LLMs, machine learning models, and summarization methods. Additionally, I will speak to a government project where a government agency tasked EK with summarizing thousands of text responses to a survey. Speaking to the following summarization methods and considerations in the blog, I will then explain EK’s choice between traditional machine learning methods for NLP and LLMs for this project and considerations to keep in mind when deciding on a summarization method for certain use cases, including when sensitive data is involved.

The History of Natural Language Processing

Natural language processing has been a relevant concept in computing since the days of Alan Turing, who defined the well-known Turing test in his famous 1950 article, “Computing Machinery and Intelligence.” The test was designed to measure a computer’s ability to impersonate a human in a real-time written conversation, such that a human would be unable to distinguish whether or not they were speaking with another human or a computer; over 70 years later, computers are still advancing to reach that point.

In 1954, the first successful attempt at an implementation of NLP was conducted by Georgetown University and IBM, where a computer used punch card code to automatically translate a batch of more than 60 Russian sentences into English. While this was an extremely controlled experiment, in the 1960s, ELIZA, one of the first “chatterbots,” was able to parse users’ sentences and output sensical and contextually appropriate sentences. However, ELIZA used pattern matching and substitution to appear like it understood prompts, as it was unable to truly understand them and provided canned responses to prompts that were unusual or nonstandard.

In the following two decades, NLP models mainly consisted of hand-written rulesets that machines relied on to understand input and produce relevant output, which were quite effortful for computer scientists to implement. Throughout the 1990s and 2000s, these were soon replaced with statistical models with the advent and propagation of machine learning and hardware that could support more complex computing. These statistical models were much more powerful and able to engage with and manipulate more data, but introduced more ambiguity due to the lack of concrete rules. Starting with machine translation models that learned how to translate text based on bilingual sets of the same text and then began using statistical machine translation, machines began to develop deeper text understanding, processing, and generation skills.

The most recent iterations of NLP have been based on transformer machine learning models, allowing for deep learning and domain-specific training, so that NLP can be customized more easily to a client use case. These attention mechanism-based models were first proposed as an initial method for modern artificial intelligence use cases in 2017, when eight computer scientists working at Google wrote the paper “Attention Is All You Need,” publicizing the transformer architecture for the first time, which has been used in models such as OpenAI’s ChatGPT and other large language models to great success. These models were the starting point for Generative AI, which for the first time allows computers to synthesize new content, rather than simply classifying, summarizing, or otherwise modifying existing content. Today, these models have taken the field of machine learning by storm, and have led to the current “AI boom,” or “AI spring.”

Abstractive vs. Extractive Summarization

There are two key types of NLP summarization techniques: extractive summarization and abstractive summarization. Understanding these methods and the models that employ them is essential for selecting the right tool for your text summarization needs. Let’s delve deeper into each type, explore the models used, and use a running example to illustrate how they work.

Extractive summarization involves selecting significant sentences or phrases directly from the source text to form a summary. The model ranks sentences based on predefined criteria, such as keyword frequency or sentence position, and then extracts the top-ranking sentences without modifying them. For an example, consider the following text:

“The rapid advancement of artificial intelligence (AI) is reshaping industries globally. Businesses are leveraging AI to optimize operations, enhance customer experiences, and drive innovation. However, the integration of AI technologies comes with challenges, including ethical considerations and the need for substantial investment.”

An extractive summarization model might produce the following summary:

“The rapid advancement of AI is reshaping industries globally. Businesses are leveraging AI to optimize operations. The integration of AI technologies comes with challenges, including ethical considerations.”

For most of the history of NLP, models have been extractive – two examples are the Natural Language Tool Kit (NLTK) and the Bidirectional Encoder Representations from Transformers (BERT) model, arguably one of the most advanced extractive models. NLTK is a more basic model that relies on frequency analysis and position-based ranking to identify key words to extract into sentences. While NLTK provides a straightforward approach, its summaries may lack coherence if the extracted sentences don’t flow naturally when combined. BERT’s ability to grasp nuanced meanings makes it more effective than basic frequency-based methods, but it still relies on extracting existing sentences.

Abstractive summarization generates new sentences that capture the essence of the source text, potentially using words and phrases not found in the original content. This approach mimics human summarization by paraphrasing and condensing information.

Using the same original text, an abstractive summarization model might produce:

“AI is rapidly transforming global industries by optimizing business operations and enhancing customer experiences. Despite its benefits, adopting AI presents ethical challenges and requires significant investment.”

In this summary, the model has rephrased the content, combining ideas from multiple sentences into a coherent and concise overview. The models used for abstractive summarization might be a little more familiar to you.

An example of an abstractive model is the Bidirectional and Auto-Regressive Transformer (BART) model, which is trained on a large dataset of text and, once given a prompt, creates a summary of the prompt using words and phrases outside of the input. BART is a sequence-to-sequence model that combines the bidirectional encoder of BERT with a decoder similar to GPT’s autoregressive models. BART is trained by corrupting text (e.g., removing or scrambling words) and learning to reconstruct the original text. This denoising process enables it to generate coherent and contextually relevant summaries. It excels at tasks requiring the generation of new text, making it suitable for abstractive summarization. BART effectively bridges the gap between extractive models like BERT and fully generative models, providing more natural summaries.

LLMs also perform abstractive summarization, as they “fill in the blanks” based on massive sets of training data. While LLMs provide the most comprehensive and elaborate human-like summaries, they are prone to “hallucinations,” where they output unrelated or nonsensical text. Furthermore, there are other concerns with using LLMs in an enterprise setting such as privacy and security, which should be considered when working with sensitive data.

Functional Use of LLMs for Summarization

Recently, a large government agency presented EK with a request to conduct and analyze a survey with the goal of gauging employee sentiment on the current state of their data landscape, in order to understand how to improve their data management processes organization-wide. This survey involved data from over 1,200 employees nationwide, and employed the use of multiple-choice questions, “select all that apply” questions, and most notably, 41 free-response questions. While free-response questions allow respondents to provide a much deeper level of thought and insight into a topic or issue, they can present issues when attempting to gauge a sentiment or identify a consensus among answers. To address this, EK created a plan of how best to summarize numerous, varied text responses without expending manual effort in reading thousands of lines of text. This led to the consideration of both machine learning models and LLMs which can capably perform summarization tasks, saving consultants time and effort best spent elsewhere.

EK prepared to analyze the survey results from this project by seeking to extract meaningful summaries of more than simply a list of words or a key quote – to deliver sentences and paragraphs that captured the sentiments of a survey question’s responses, capturing respondents’ multiple emotions or points of view. For this purpose, extractive summarization model was not a good fit – even with stopwords removed, NLTK did not provide enough keywords to provide a complete description of what respondents indicated in their responses, and BERT’s extractive approach could not accurately synthesize coherent responses from answers that varied from sentence to sentence. As such, EK found that abstractive summarization tools were more suitable for this survey analysis. Abstractive summarization allowed us to gather sentiment from multiple viewpoints without “chopping up” the text directly. This allowed us to create a polished and readable final product that was more than a set of quotations.

One key issue in our use case was that LLMs hosted by a provider through the Internet are prone to data leaks and unwanted data retention, where sensitive information becomes part of the LLM’s training set. A data breach affecting one of these provider/host companies can jeopardize proprietary client information, release sensitive personal information, completely upend months of hard work, and even expose companies to legal liability.

To securely automate the sentiment analysis of our client’s data, EK used Ollama, an API that allows for various LLMs to be downloaded locally behind a firewall and run using the computer’s CPU/GPU processing power. Ollama features a large selection of LLMs to choose from, including the latest model from Meta AI, Llama, which we chose to use for our project.

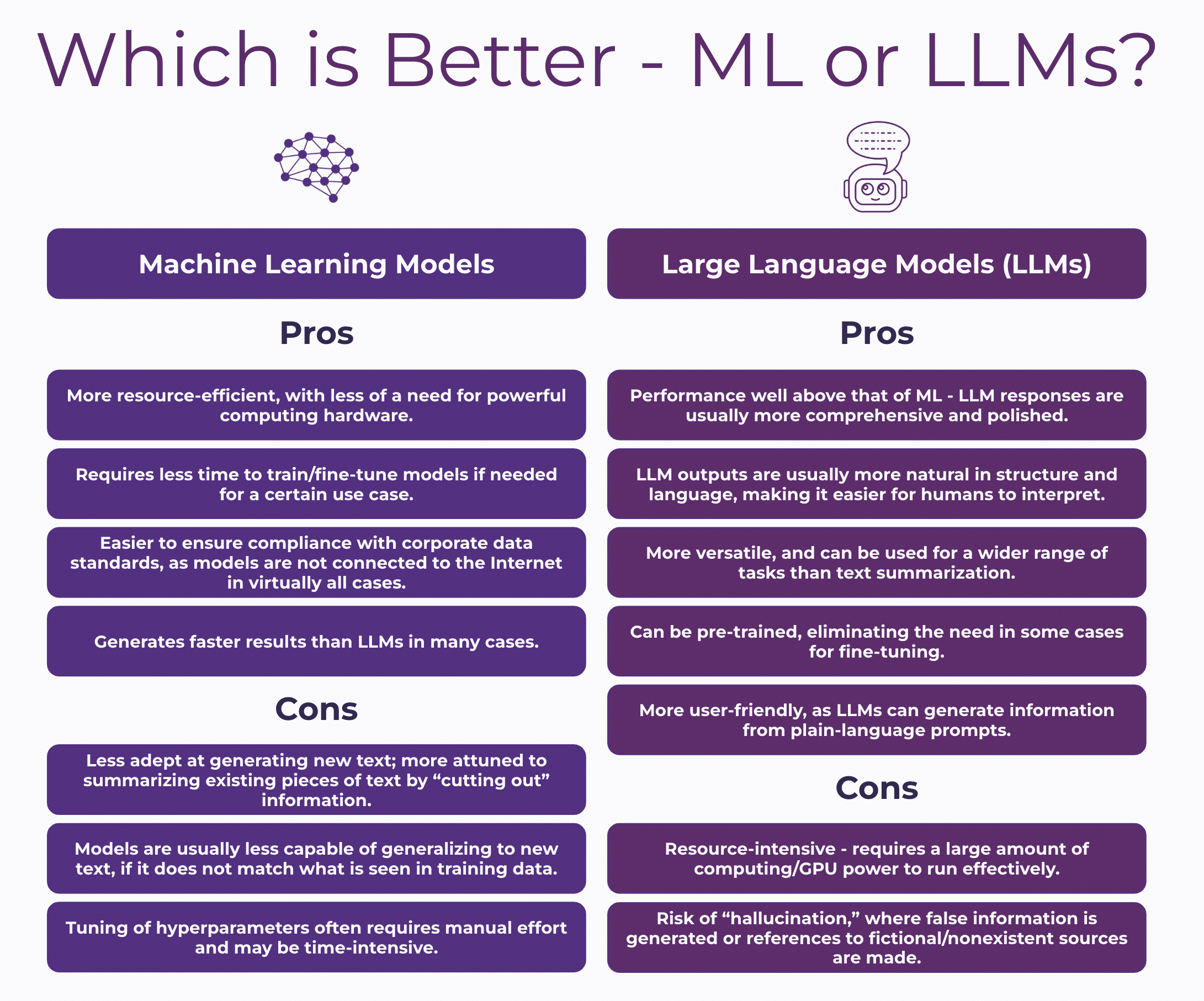

Based on this set of pros and cons and the context of this government project, EK chose LLMs for their superior ability at producing an output more similar to a final product and their ability to combine multiple similar viewpoints into one summary while being able to select the most common sentiments and present them as separate ideas.

Outcomes and Conclusion

Through this engagement with EK, the large federal agency received insights from the locally hosted instance of Llama that provided key stakeholders the information of over 1,200 respondents and their textual responses. Seeing these numerous survey answers over 41 free-response questions boiled down to key summaries and actionable insights allowed the agency to identify key areas of focus moving forward in their data management improvement efforts. Through the key areas of improvement identified through summarization, the agency was able to prioritize certain technical facets of their data landscape that were identified as must haves in future tooling solutions as well as areas for more immediate organizational change to garner organizational engagement and buy-in.

Free-text responses can be difficult to process and summarize, especially when filled with various distinct meanings and sentiments. While machine learning models excel at more basic sentiment and keyword analysis, the advanced language understanding power behind an LLM allows for coherent, nuanced, and comprehensive summaries to be formed, capturing multiple viewpoints and presenting them coherently. For this engagement, a locally hosted and secure LLM turned out to be the right choice, as EK was able to deliver survey results that were concise, accurate, and informative.

If you’re ready to unlock the full potential of advanced NLP tools—whether through traditional machine learning models or cutting-edge LLMs—Enterprise Knowledge can guide you every step of the way. Contact us at info@enterprise-knowledge.com to learn how we can help your organization streamline processes, gain actionable insights, and make more informed decisions faster!