Why do Large Language Models (LLMs) sometimes produce unexpected or inaccurate results, often referred to as ‘hallucinations’? What challenges do organizations face when attempting to align the capabilities of LLMs with their specific business contexts? These pressing questions underscore the complexities and potential problems of LLMs. Yet, the integration of LLMs with Knowledge Graphs (KGs) offers promising avenues to not only address these concerns but also to revolutionize the landscape of data processing and knowledge extraction. This paper delves into this innovative integration, exploring how it shapes the future of artificial intelligence (AI) and its real-world applications.

Introduction

Large Language Models (LLMs) have been trained on diverse and extensive datasets containing billions of words to understand, generate, and interact with human language in a way that is remarkably coherent and contextually relevant. Knowledge Graphs (KGs) are a structured form of information storage that utilizes a graph database format to connect entities and their relationships. KGs translate the relationships between various concepts into a mathematical and logical format that both humans and machines can interpret. The purpose of this paper is to explore the synergetic relationship between LLMs and KGs, showing how their integration can revolutionize data processing, knowledge extraction, and artificial intelligence (AI) capabilities. We explain the complexities of LLMs and KGs, showcase their strengths, and demonstrate how their combination can lead to more efficient and comprehensive knowledge processing and improved performance in AI applications.

Understanding Generative Large Language Models

LLMs can generate text that closely mimics human writing. They can compose essays, poems, and technical articles, and even simulate conversation in a remarkably human-like manner. LLMs use deep learning, specifically a form of neural network architecture known as transformers. This architecture allows the model to weigh the importance of different words in a sentence, leading to a better understanding of language context and syntax. One of the key strengths of LLMs is their ability to understand and respond to context within a conversation or a text. This makes them particularly effective for applications like chatbots, content creation, and language translation. However, despite the many capabilities of LLMs, they have limitations. They can generate incorrect or biased information, and their responses are influenced by the data they were trained on. Moreover, they do not possess true understanding or consciousness; they simply simulate this understanding based on patterns in data.
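The idea of weighing the importance of different words can be illustrated with a small sketch of scaled dot-product attention, the core operation in transformers. The two-dimensional word vectors below are invented for illustration; real models learn vectors with hundreds or thousands of dimensions and apply learned projections that are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical 2-dimensional vectors for the words in "the bank approved the loan".
tokens = ["the", "bank", "approved", "the", "loan"]
vectors = {
    "the": [0.1, 0.0],
    "bank": [1.0, 0.8],
    "approved": [0.4, 0.9],
    "loan": [0.9, 1.0],
}
keys = [vectors[t] for t in tokens]

# How strongly does "bank" attend to each word in the sentence?
weights = attention_weights(vectors["bank"], keys)
for token, weight in zip(tokens, weights):
    print(f"{token:>9}: {weight:.2f}")
```

With these made-up vectors, "bank" places more weight on "loan" than on "the", which is the kind of signal that helps a model settle on the financial sense of the word.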

Exploring Knowledge Graphs

KGs are a powerful way to represent and store information in a structured format, making it easier for both humans and machines to access and understand complex datasets. At their core, knowledge graphs are made up of entities (nodes) and relationships (edges) that connect these entities. This structure represents complex relationships between different pieces of data in a way that is both visually intuitive and computationally efficient. KGs are often used to integrate structured and unstructured data from multiple sources, providing a unified and more comprehensive view of the data. One of the strengths of KGs is the ease with which they can be queried. Query languages such as SPARQL (the W3C standard query language for RDF graphs) enable users to efficiently extract complex information from a knowledge graph. KGs find applications in various fields, including search engines (like Google’s Knowledge Graph), recommendation systems, data integration platforms, social networks, business intelligence, and artificial intelligence.
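As a minimal sketch of the node-and-edge structure, the snippet below stores a handful of facts as (subject, predicate, object) triples and answers pattern queries over them. It uses plain Python rather than a graph database, and the relation names and the `match` and `neighbors` helpers are assumptions made for illustration. Leaving a position unspecified plays roughly the role that a variable plays in a SPARQL triple pattern.

```python
# Each fact is a (subject, predicate, object) triple: two nodes joined by a labeled edge.
TRIPLES = {
    ("Ada Lovelace", "worked_with", "Charles Babbage"),
    ("Charles Babbage", "designed", "Analytical Engine"),
    ("Ada Lovelace", "wrote_about", "Analytical Engine"),
    ("Ada Lovelace", "born_in", "London"),
}

def match(triples, subject=None, predicate=None, obj=None):
    """Return the triples that fit the pattern; None acts as a wildcard."""
    return sorted(
        (s, p, o)
        for s, p, o in triples
        if subject in (None, s) and predicate in (None, p) and obj in (None, o)
    )

def neighbors(triples, node):
    """Nodes reachable from `node` by following one outgoing edge."""
    return sorted({o for s, _, o in triples if s == node})

# Which edges point at the Analytical Engine?
for triple in match(TRIPLES, obj="Analytical Engine"):
    print(triple)

# What is directly connected to Ada Lovelace?
print(neighbors(TRIPLES, "Ada Lovelace"))
```

A production KG would typically sit in a dedicated graph store, but the query idea is the same: fix the parts of the pattern you know and let the graph fill in the rest.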

Enhancing Knowledge Graph Creation with LLMs

KGs make implicit human knowledge explicit and allow inferences to be drawn from the information they are provided. The ontology, or graph model, serves as an anchor, or set of constraints, for these inferences. Once created and validated, KGs can be trusted as a source of truth because they make inferences based on the semantics and structure of their model (ontology). Because of this element of human intervention, humans can ensure that the interpretation of information is correct for the given context, in particular alleviating the ‘garbage in – garbage out’ phenomenon. However, this same human intervention makes KGs labor-intensive and time-consuming to create. KGs are built on one of a small number of graph database frameworks, and they are generally produced by individuals with a specialized skill set, specialized software, or both. To make the information in a KG accessible, the graph must be stored in an appropriate graph database platform and queried with a specialized query language.
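To make the idea of ontology-constrained inference concrete, here is a small sketch of one common kind of inference, class inheritance: if an entity belongs to a class, it also belongs to every parent of that class. The class names, the `acct-001` identifier, and the `inferred_types` helper are hypothetical, and a real KG platform would usually delegate this to a reasoner rather than hand-written code.

```python
# Hypothetical ontology: each class maps to its direct parent class.
SUBCLASS_OF = {
    "SavingsAccount": "BankAccount",
    "BankAccount": "FinancialProduct",
}

# Hypothetical instance data: each entity is asserted to have one class.
ASSERTED_TYPE = {
    "acct-001": "SavingsAccount",
}

def inferred_types(entity):
    """Return the asserted class followed by every ancestor class."""
    types = []
    current = ASSERTED_TYPE.get(entity)
    # Walk up the hierarchy; the membership check guards against cycles.
    while current is not None and current not in types:
        types.append(current)
        current = SUBCLASS_OF.get(current)
    return types

print(inferred_types("acct-001"))
# → ['SavingsAccount', 'BankAccount', 'FinancialProduct']
```

Only the first type was stated explicitly; the other two follow from the structure of the ontology, which is why a validated ontology matters for the trustworthiness of what the graph infers.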

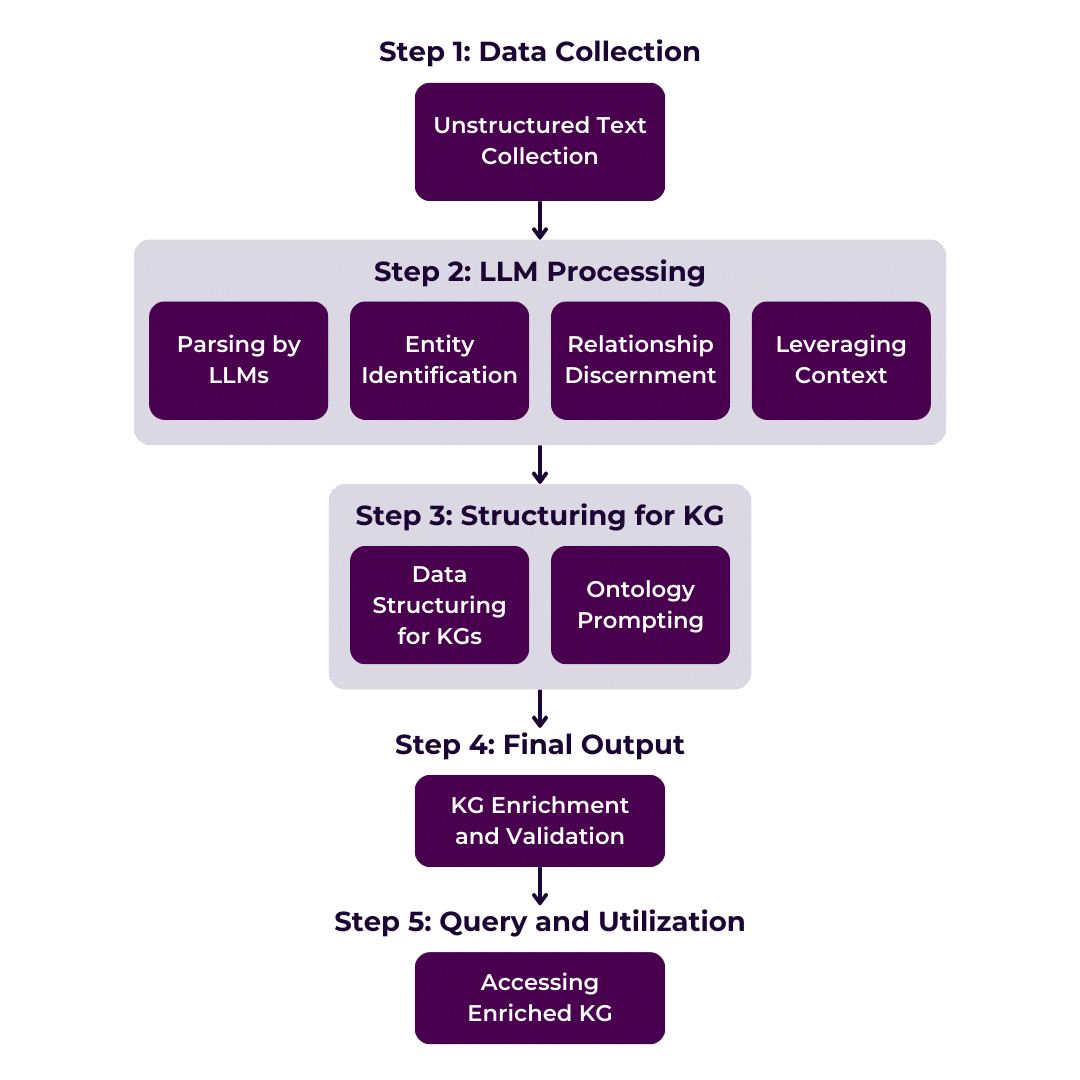

Enhancing KG Creation with LLMs through Ontology Prompting

Through a technique known as ontology prompting, LLMs can effectively parse through vast amounts of unstructured text, identify and extract pertinent entities, and discern the intricate relationships between these entities. By understanding and leveraging the context in which data appears, these models can not only recognize diverse entity types (such as people, places, and organizations) but also delineate the nuanced relationships that connect these entities. This process significantly streamlines the creation and enrichment of KGs, transforming raw, unstructured data into a structured, interconnected web of knowledge that is both accessible and actionable. The integration of LLMs into KG construction not only enriches the data but also significantly augments the utility and accuracy of the knowledge graphs in various applications, ranging from semantic search and content recommendation to advanced analytics and decision-making support.
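One plausible way to put ontology prompting into practice is sketched below: the ontology is written into the prompt so the model knows which entity types and relations are allowed, and the reply is then checked against the same ontology before anything enters the graph. The ontology, the JSON reply format, and the `build_prompt` and `validate` helpers are all assumptions for illustration, and the model call itself is replaced by a hard-coded sample reply.

```python
import json

# Hypothetical ontology: allowed entity classes and, per relation, (domain, range).
ONTOLOGY = {
    "classes": ["Person", "Organization", "Place"],
    "relations": {
        "works_for": ("Person", "Organization"),
        "located_in": ("Organization", "Place"),
    },
}

def build_prompt(text, ontology):
    """Embed the ontology in the instructions so extraction follows the schema."""
    relation_lines = "\n".join(
        f"- {name}: {domain} -> {range_}"
        for name, (domain, range_) in ontology["relations"].items()
    )
    return (
        "Extract facts from the text as a JSON list of objects with keys "
        "subject, subject_type, relation, object, object_type.\n"
        f"Allowed entity types: {', '.join(ontology['classes'])}\n"
        f"Allowed relations (domain -> range):\n{relation_lines}\n"
        f"Text: {text}"
    )

def validate(raw_response, ontology):
    """Keep only extracted facts whose relation and types conform to the ontology."""
    valid = []
    for fact in json.loads(raw_response):
        expected = ontology["relations"].get(fact.get("relation"))
        if expected == (fact.get("subject_type"), fact.get("object_type")):
            valid.append((fact["subject"], fact["relation"], fact["object"]))
    return valid

prompt = build_prompt("Maria works for Acme, which is based in Oslo.", ONTOLOGY)

# Stand-in for a model reply; a real system would send `prompt` to an LLM.
sample_response = json.dumps([
    {"subject": "Maria", "subject_type": "Person", "relation": "works_for",
     "object": "Acme", "object_type": "Organization"},
    {"subject": "Acme", "subject_type": "Organization", "relation": "founded_by",
     "object": "Maria", "object_type": "Person"},
])

triples = validate(sample_response, ONTOLOGY)
print(triples)
# → [('Maria', 'works_for', 'Acme')]
```

The second fact in the sample reply uses a relation the ontology does not define, so it is dropped. Validating on the way in keeps the human-designed model in control of what the graph accepts, even when the extraction itself is automated.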

Improving LLM Performance with Knowledge Graphs

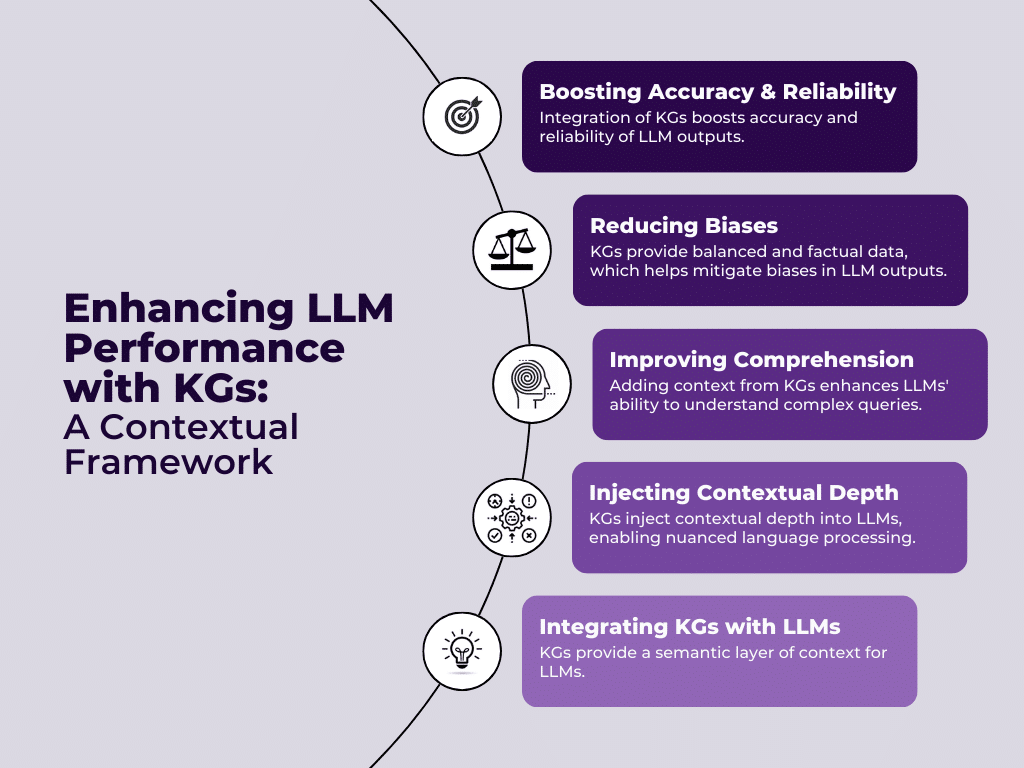

The integration of KGs into LLMs offers substantial performance improvements, particularly in enhancing contextual understanding, reducing biases, and boosting accuracy. KGs inject a semantic layer of contextual depth into LLMs, enabling these models to grasp and process language with a more nuanced understanding of the subject matter. This interaction significantly enhances the comprehension capabilities of LLMs, as they become more adept at interpreting and responding to complex queries with enhanced precision. Moreover, the structured nature of KGs aids in mitigating biases inherent in LLMs. By providing a balanced and factual representation of information, KGs help neutralize skewed perspectives and promote a more objective and informed generation of content. Finally, the incorporation of KGs into LLMs has been instrumental in enhancing the accuracy and reliability of the output generated by LLMs.

The validated data from KGs serve as a solid foundation and reduce ambiguities and errors in the information processed by LLMs, thereby ensuring a higher quality of output that is trustworthy, traceable, and contextually coherent.
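A common pattern for supplying validated KG data to an LLM is to retrieve the relevant facts first and place them in the prompt along with their sources. The sketch below assumes a tiny in-memory fact list and a naive name-matching retriever; the facts, source labels, and the `retrieve` and `grounded_prompt` helpers are hypothetical, and a real system would query the graph database and then pass the resulting prompt to a model.

```python
# Hypothetical validated facts, each stored with the source it came from.
FACTS = [
    ("Acme", "headquartered_in", "Oslo", "company-registry"),
    ("Acme", "founded_in", "1999", "annual-report-2023"),
    ("Globex", "headquartered_in", "Lyon", "company-registry"),
]

def retrieve(question, facts):
    """Select facts whose subject is mentioned in the question."""
    lowered = question.lower()
    return [fact for fact in facts if fact[0].lower() in lowered]

def grounded_prompt(question, facts):
    """Build a prompt that restricts the model to retrieved, citable facts."""
    lines = [
        f"[{i}] {s} {p.replace('_', ' ')} {o} (source: {src})"
        for i, (s, p, o, src) in enumerate(retrieve(question, facts), start=1)
    ]
    context = "\n".join(lines) if lines else "(no matching facts)"
    return (
        "Answer using only the facts below and cite them by number. "
        "If they are insufficient, say so.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )

prompt = grounded_prompt("Where is Acme headquartered?", FACTS)
print(prompt)
```

Because every fact carries a source label and a citation number, an answer built from this prompt can be traced back to the graph entry that supports it.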

Case Studies and Applications

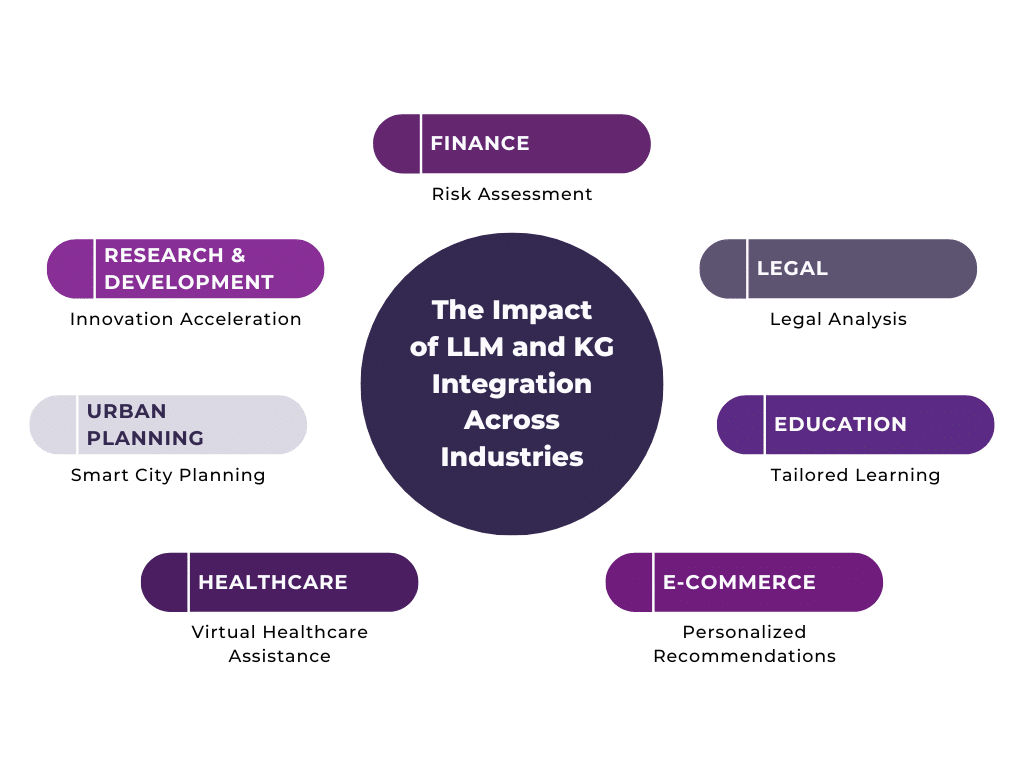

The integration of LLMs and KGs is making significant advances across various industries and transforming how we process and leverage information. For instance, in the finance sector, LLMs combined with KGs are used for risk assessment and fraud detection. These systems analyze transaction patterns, detect anomalies, and understand the relationships between different entities, helping financial institutions mitigate risks and prevent fraudulent activities. Another example is personalized recommendation systems. E-commerce platforms like Amazon utilize KGs and LLMs to understand customer preferences, search histories, and purchase behaviors. This integration allows for highly personalized product and content recommendations, improving customer experience and increasing sales and engagement. In the legal industry, LLMs and KGs are used to analyze legal documents, case law, and statutes. They help in summarizing legal documents, extracting relevant clauses, and conducting research, thereby saving time for legal professionals and improving the accuracy of legal advice.

The potential of LLM and KG integrations extends well beyond these examples. In education, LLMs and KGs can guide learners through tailored and personalized educational journeys. In healthcare, sophisticated virtual assistants are changing telemedicine by supporting preventive care and preliminary diagnoses. Urban planning and management stand to gain from the analysis of diverse data sources, from traffic patterns to social media sentiment, enabling smarter city planning. Research and development is also set to accelerate, with LLMs and KGs working together to automate literature reviews, foster novel research ideas, and predict experimental outcomes.

Challenges and Considerations

While the integration of LLMs and KGs is promising, it is accompanied by a set of significant challenges and considerations. From a technical perspective, merging LLMs with KGs requires sophisticated algorithms capable of handling the complexity of KG structures and the nuances of natural language processed by LLMs. For example, ensuring data compatibility, maintaining real-time data synchronization, and managing the computational load are difficult tasks that require advanced solutions and ongoing innovation. Moreover, ethical and privacy concerns are among the top challenges of this integration. The use of LLMs and KGs involves processing vast amounts of data, some of which may be sensitive or personal. Ensuring that these technologies adhere to privacy laws and regulations, maintain data confidentiality, and make ethically sound decisions is a continuous challenge. There is also the risk of perpetuating biases present in LLM training data, which requires meticulous oversight and the implementation of bias-mitigation strategies. Furthermore, the sustainability of these advanced technologies cannot be overlooked. The energy consumption associated with training and running large-scale LLMs and maintaining extensive KGs poses significant environmental concerns. As the demand for these technologies grows, finding ways to minimize their carbon footprint and developing more energy-efficient models become increasingly important. Addressing these technical, ethical, and sustainability challenges is crucial for the responsible and effective implementation of LLM and KG integrations.

Conclusion

In this white paper, we explored the dynamic interplay between LLMs and KGs, unraveling the profound impact of their integration on various industries. We delved into the transformative capabilities of LLMs in enhancing the creation and enrichment of KGs, highlighting automated data extraction, contextual understanding, and data enrichment. Conversely, we discussed how KGs can improve LLM performance by imparting contextual depth, mitigating biases, enabling source traceability, and increasing accuracy and reliability. We also showcased the practical benefits and revolutionary potential of this synergy. In conclusion, the combination of LLMs and KGs stands at the forefront of technological advancement and directs us toward an era of enhanced intelligence and informed decision-making. Realizing this potential, however, will require continued research, interdisciplinary collaboration, and an ecosystem that prioritizes ethical considerations and sustainability.

Want to jumpstart your organization’s use of LLMs? Check out our Enterprise LLM Accelerator and contact us at info@enterprise-knowledge.com for more information!

About this article

This is an article within a linked series written to provide a straightforward introduction to getting started with large language models (LLMs) and knowledge graphs (KGs). You can find the next article in the series here.