It has long been recognized that organizations spend as much as 30-40% of their time searching for or recreating information. Now, imagine a dedicated analyst who doesn’t just find or analyze data for you but also roams the office, listens to conversations, reads emails, and proactively sends you updates: spotting outdated data, summarizing new information, flagging inconsistencies, and prompting follow-ups. That’s what an AI agent does. It autonomously monitors content and data platforms, collaboration tools like Slack and Teams, and even email, and suggests updates or actions without waiting for instructions. Instead of sending you on a massive data hunt to answer “What’s the latest on this client?”, an AI agent pulls CRM notes, emails, and contract changes, then summarizes them in Slack or Teams or publishes the findings as a report. It doesn’t just react; it takes initiative.

The potential of AI agents for productivity gains within organizations is undeniable—and it’s no longer a distant future. However, the key question today is: when is the right time to build and deploy an AI agent, and when is simpler automation the more effective choice?

While the idea of a fully autonomous assistant handling routine tasks is appealing, AI agents require a complex framework to succeed. This includes breaking down silos, ensuring knowledge assets are AI-ready, and implementing guardrails to meet enterprise standards for accuracy, trust, performance, ethics, and security.

Over the past couple of years, we’ve worked closely with executives who are navigating what it truly means for their organizations to be “AI-ready” or “AI-powered”, and as AI technologies evolve, this challenge has only become more complex and urgent for all of us.

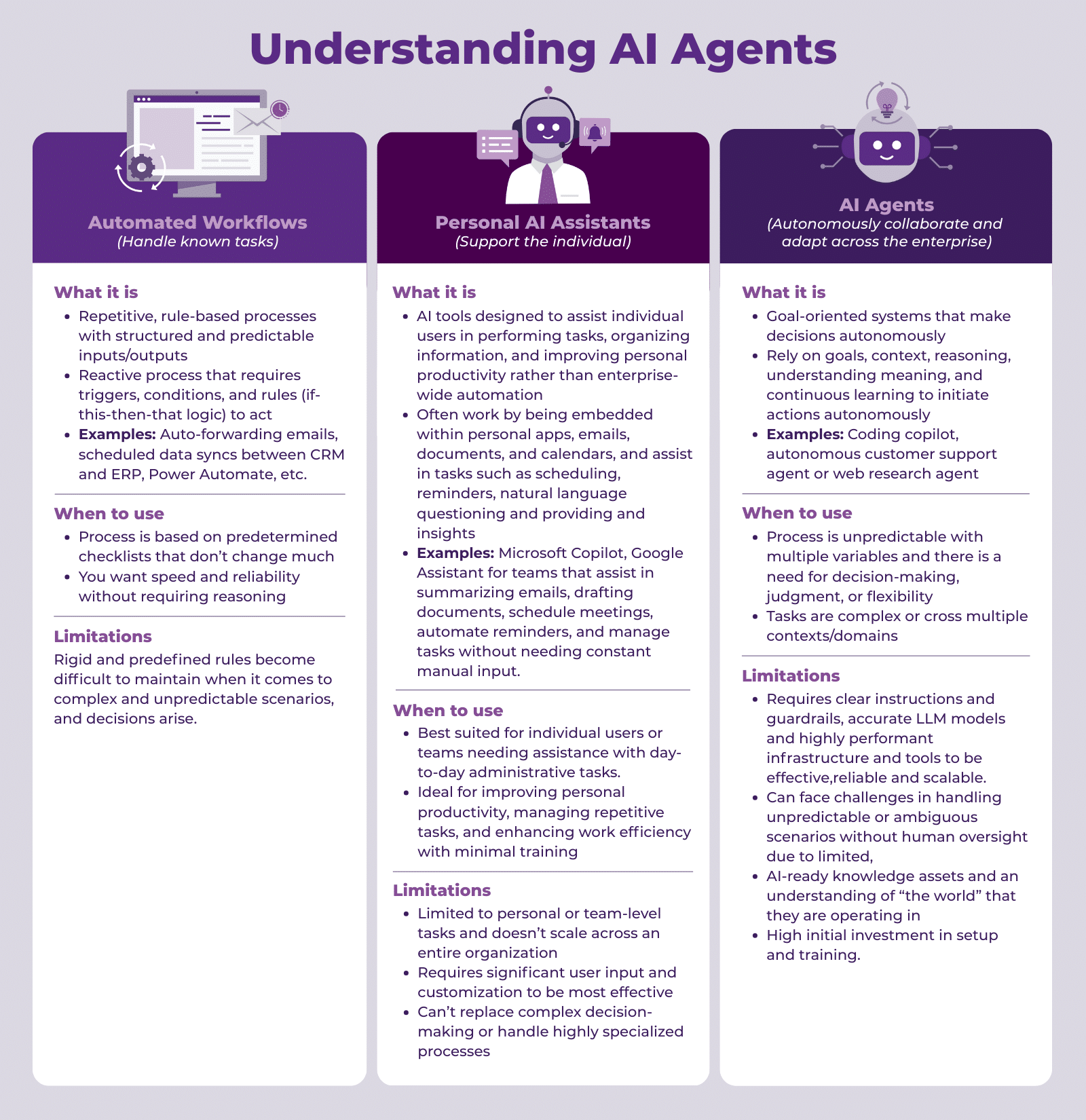

To move forward effectively, it’s crucial to understand the role of AI agents compared to traditional or narrow AI, automation, or augmentation solutions. Specifically, it is important to recognize the unique advantages of agent-based AI solutions, identify the right use cases, and ensure organizations have the best foundation to scale effectively.

In the first part of this two-part series, I’ll outline the core building blocks for organizations looking to integrate AI agents. The goal of this series is to provide insights that help set realistic expectations and contribute to informed decisions around AI agent integration, moving beyond technical experiments to deliver meaningful outcomes and value to the organization.

Understanding AI Agents

AI agents are goal-oriented autonomous systems built from large language and other AI models, business logic, guardrails, and the supporting technology infrastructure needed to carry out complex, resource-intensive tasks. Agents are designed to learn from data, adapt to different situations, and execute tasks autonomously. They understand natural language, take initiative, and act on behalf of humans and organizations across multiple tools and applications. Unlike traditional machine learning (ML) and AI automations (such as virtual assistants or recommendation engines), AI agents offer initiative, adaptability, and context-awareness by proactively accessing, analyzing, and acting on knowledge and data across systems.

Components of Agentic AI Framework

1. Relevant Language and AI Models

Language models are the agent’s cognitive core, essentially its “brain”, responsible for reasoning, planning, and decision-making. While not every AI agent requires a Large Language Model (LLM), most modern and effective agents rely on LLMs and reinforcement learning to evaluate strategies and select the best course of action. LLM-powered agents are especially adept at handling complex, dynamic, and ambiguous tasks that demand interpretation and autonomous decision-making.

Choosing the right language model also depends on the use case, task complexity, desired level of autonomy, and the organization’s technical environment. Some tasks are better kept simple, using more deterministic workflows or specialized algorithms. For example, an expertise-focused agent (e.g., a financial fraud detection agent) is more effective when developed with purpose-built algorithms than with a general-purpose LLM because the subject area requires hyper-specific, non-generalizable knowledge. Likewise, well-defined, repetitive tasks, such as data sorting, form validation, or compliance checks, can be handled by rule-based agents or classical machine learning models, which are cheaper, faster, and more predictable. LLMs, meanwhile, add the most value in tasks that require flexible reasoning and adaptation, such as orchestrating integrations with multiple tools, APIs, and databases to perform real-world actions like running dynamic customer service processes, placing trades, or interpreting incomplete and ambiguous information. In practice, we are finding that a hybrid approach works best.
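As a rough illustration of the hybrid approach described above, the sketch below routes well-defined tasks to deterministic handlers and sends everything else to a language model. The handler names, the routing table, and `llm_fallback` are hypothetical placeholders introduced only for this example; a real system would likely classify tasks in a more robust way than prefix matching.

```python
import re
from typing import Callable, Dict


def llm_fallback(task: str) -> str:
    # Hypothetical stand-in for an LLM call; a real agent would call a model API here.
    return f"LLM: needs flexible reasoning -> {task!r}"


def validate_email_form(task: str) -> str:
    # Deterministic rule: cheap, fast, and predictable.
    match = re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", task)
    return "RULE: valid email" if match else "RULE: invalid email"


def sort_numbers(task: str) -> str:
    # Deterministic rule: extract the integers in the task text and sort them.
    numbers = sorted(int(n) for n in re.findall(r"-?\d+", task))
    return f"RULE: {numbers}"


# Illustrative routing table (an assumption): task prefix -> deterministic handler.
RULE_HANDLERS: Dict[str, Callable[[str], str]] = {
    "validate email": validate_email_form,
    "sort": sort_numbers,
}


def route(task: str) -> str:
    """Send well-defined tasks to rules; everything else goes to the LLM."""
    lowered = task.lower()
    for prefix, handler in RULE_HANDLERS.items():
        if lowered.startswith(prefix):
            return handler(task)
    return llm_fallback(task)
```

The design choice here is simply that the cheaper, more predictable path is tried first, and the model is reserved for tasks that no rule claims.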

2. Semantic Layer and Unified Business Logic

AI agents need access to a shared, consistent view of enterprise data to avoid conflicting actions, poor decision-making, or the reinforcement of data silos. Increasingly, agents will also need to interact with external data and coordinate with other agents, which compounds the risk of misalignment, duplication, or even contradictory outcomes. This is where a semantic layer becomes critical. By standardizing definitions, relationships, and business context across knowledge and data sources, the semantic layer provides agents with a common language for interpreting and acting on information, connecting agents to a unified business logic. Across several recent projects, implementing a semantic layer has improved the accuracy and precision of initial AI results from around 50% to between 80% and 95%, depending on the use case.

The semantic layer includes metadata management, business glossaries, and taxonomy/ontology/graph data schemas that work together to provide a unified and contextualized view of data across typically siloed systems and business units, enabling agents to understand and reason about information within the enterprise context. These semantic models define the relationships between data entities and concepts, creating a structured representation of the business domain the agent is operating in. Semantic models form the foundation for understanding data and how it relates to the business. By incorporating two or more of these semantic model components, the semantic layer provides the foundation for building robust and effective agentic perception, cognition, action, and learning that can understand, reason, and act on org-specific business data. For any AI, but specifically for AI agents, a semantic layer is critical in providing access to:

- Organizational context and meaning to raw data to serve as a grounding ‘map’ for accurate interpretation and agent action;

- Standardized business terms that establish a consistent vocabulary for business metrics (e.g., defining “revenue” or “store performance” ), preventing confusion and ensuring the AI uses the same definitions as the business; and

- Explainability and trust through metadata and lineage to validate and track why agent recommendations are compliant and safe to adopt.

Overall, the semantic layer ensures that all agents are working from the same trusted source of truth, and enables them to exchange information coherently, align with organizational policies, and deliver reliable, explainable results at scale. In a multi-agent system with multiple domain-specific agents, not every agent will necessarily work off the same semantic layer, but each will have the organizational business context it needs to interpret messages from the others, courtesy of the domain-specific semantic layers.
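To make the idea of standardized business terms more concrete, here is a minimal sketch of a business glossary that agents could consult before acting on a metric. The glossary entries, definitions, synonym map, and source system names are all invented for illustration; an actual semantic layer would typically sit on a taxonomy, ontology, or graph store rather than in-memory dictionaries.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Term:
    name: str
    definition: str
    source_system: str  # lineage: where the metric is computed (hypothetical names)


# Hypothetical glossary entries; real definitions come from the business.
GLOSSARY: Dict[str, Term] = {
    "revenue": Term(
        "revenue", "Net sales after returns and discounts", "finance_warehouse"
    ),
    "store performance": Term(
        "store performance",
        "Revenue per square foot, trailing 12 months",
        "retail_analytics",
    ),
}

# Synonyms map domain-specific wording onto one canonical term.
SYNONYMS: Dict[str, str] = {"sales": "revenue", "turnover": "revenue"}


def resolve(term: str) -> Optional[Term]:
    """Return the canonical glossary entry for a term, or None if it is unknown."""
    key = term.strip().lower()
    key = SYNONYMS.get(key, key)
    return GLOSSARY.get(key)
```

Two agents that call `resolve("Turnover")` and `resolve("revenue")` get the same definition and lineage, which is the kind of shared vocabulary the section describes. Returning `None` for unknown terms lets the caller escalate instead of guessing.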

The bottom line is that, without this reasoning layer, the “black box” nature of agents’ decision-making erodes trust, making it difficult for organizations to adopt and rely on agents.

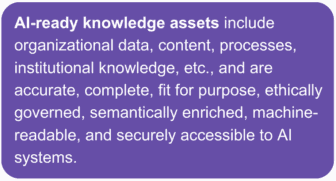

3. Access to AI-Ready Knowledge Assets and Sources

Agents require accurate, comprehensive, and context-rich organizational knowledge assets to make sound decisions. Without access to high-quality, well-structured data, agents, especially those powered by LLMs, struggle to understand complex tasks or reason effectively, often leading to unreliable or “hallucinated” outputs. In practice, this means organizations making strides with effective AI agents need to:

- Capture and codify expert knowledge in a machine-readable form that is readily interpretable by AI models so that tacit know-how, policies, and best practices are accessible to agents, not just locked in human workflows or static documents;

- Connect structured and unstructured data sources, from databases and transactional systems to documents, emails, and wikis, into a connected, searchable layer that agents can query and act upon;

- Provide semantically enriched assets with well-managed metadata, consistent labels, and standardized formats to make them interoperable with common AI platforms;

- Align and organize internal and external data so agents can seamlessly draw on employee-facing knowledge (policies, procedures, internal systems) as well as customer-facing assets (product documentation, FAQs, regulatory updates) while maintaining consistency, compliance, and brand integrity; and

- Enable access to AI assets and systems while maintaining strict controls over who can use them, how they are used, and where their data flows.

Beyond static access to knowledge, agents must also query and interact dynamically with various sources of data and content. This includes connecting to applications, websites, content repositories, and data management systems, and taking direct actions, such as reading from and writing to enterprise applications, updating records, or initiating workflows.

Enabling this capability requires a strong design and engineering foundation, allowing agents to integrate with external systems and services through standard APIs, operate within existing security protocols, and respect enterprise governance and record compliance requirements. A unified approach, bringing together disparate data sources into a connected layer (see semantic layer component above), helps break down silos and ensures agents can operate with a holistic, enterprise-wide view of knowledge.
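The sketch below illustrates one way an agent-facing search layer might combine connected knowledge assets with access controls, checking entitlements before any content is matched. The `Asset` fields, the sample assets, and the role names are assumptions made for this example, and the keyword overlap stands in for whatever retrieval method an organization actually uses.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Asset:
    title: str
    text: str
    audience: str  # "internal" or "customer"
    allowed_roles: FrozenSet[str]


# Hypothetical knowledge assets drawn from different source systems.
ASSETS: List[Asset] = [
    Asset(
        "Refund policy",
        "refund requests are approved within 14 days",
        "internal",
        frozenset({"support", "finance"}),
    ),
    Asset(
        "Product FAQ",
        "how to request a refund for a product",
        "customer",
        frozenset({"support", "finance", "public"}),
    ),
    Asset(
        "Contract terms",
        "client contract renewal pricing",
        "internal",
        frozenset({"finance"}),
    ),
]


def search(query: str, role: str) -> List[str]:
    """Return titles of assets the role may see that share a word with the query."""
    words = set(query.lower().split())
    hits = []
    for asset in ASSETS:
        if role not in asset.allowed_roles:
            continue  # enforce entitlements before any content matching
        if words & set(asset.text.lower().split()):
            hits.append(asset.title)
    return hits
```

Filtering on role before matching means restricted content never enters the candidate set, so it cannot leak into an agent's answer through ranking or summarization.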

4. Instructions, Guardrails, and Observability

Organizations are largely unprepared for agentic AI due to several factors: the steep leap from traditional, predictable AI to complex multi-agent orchestration, persistent governance gaps, a shortage of specialized expertise, integration challenges, and inconsistent data quality, to name a few. Most critically, the ability to effectively control and monitor agent autonomy remains a fundamental barrier—posing significant security, compliance, and privacy risks. Recent real-world cases highlight how quickly things can go wrong, including tales of agents deleting valuable data, offering illegal or unethical advice, and amplifying bias in hiring decisions or in public-sector deployments. These failures underscore the risks of granting autonomous AI agents high-level permissions over live production systems without robust oversight, guardrails, and fail-safes. Until these gaps are addressed, autonomy without accountability will remain one of the greatest barriers to enterprise readiness in the agentic AI era.

As such, for AI agents to operate effectively within the enterprise, they must be guided by clear instructions, protected by guardrails, and monitored through dedicated evaluation and observability frameworks.

- Instructions: Instructions define an AI agent’s purpose, goals, and persona. Agents don’t inherently understand how a specific business or organization operates. Instead, that knowledge comes from existing enterprise standards, such as process documentation, compliance policies, and operating models, which provide the foundational inputs for guiding agent behavior. LLMs can interpret these high-level standards and convert them into clear, step-by-step instructions, ensuring agents act in ways that align with organizational expectations. For example, in a marketing context, an LLM can take a general directive like, “All published content must reflect the brand voice and comply with regulatory guidelines”, and turn it into actionable instructions for a marketing agent. The agent can then assist the marketing team by reviewing a draft email campaign, identifying tone or compliance issues, and suggesting revisions to ensure the content meets both brand and regulatory standards.

- Guardrails: Guardrails are safety measures that act as the protective boundaries within which agents operate. Agents need guardrails across different functions to prevent them from producing harmful, biased, or inappropriate content and to enforce security and ethical standards. These include relevance and output validation guardrails; personally identifiable information (PII) filters that detect unsafe inputs or prevent leakage of PII; reputation and brand alignment checks; privacy and security guardrails that enforce authentication, authorization, and access controls to prevent unauthorized data exposure; guardrails against prompt attacks; and content filters for harmful topics.

- Observability: Even with strong instructions and guardrails, agents must be monitored in real time to ensure they behave as expected. Observability includes logging actions, tracking decision paths, monitoring model outputs, cost monitoring and performance optimization, and surfacing anomalies for human review. A good starting point for managing agent access is mapping operational and security risks for specific use cases and leveraging unified entitlements (identity and access control across systems) to apply strict role-based permissions and extend existing data security measures to cover agent workflows.
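As a simplified illustration of how a guardrail and observability can work together, the sketch below redacts two kinds of PII from an agent's draft output and records an audit entry for each call. The regular expressions, the in-memory `AUDIT_LOG`, and the function names are assumptions for this example; production PII detection generally needs far broader coverage than two patterns.

```python
import re
from typing import Dict, List

# Simplified patterns (assumptions): email addresses and US-style SSNs only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# In-memory audit trail; a real deployment would ship this to a logging platform.
AUDIT_LOG: List[Dict[str, object]] = []


def redact_pii(text: str) -> str:
    """Guardrail: replace detected PII with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)


def guarded_output(agent: str, draft: str) -> str:
    """Apply the guardrail and log what happened for later human review."""
    safe = redact_pii(draft)
    AUDIT_LOG.append({"agent": agent, "redacted": safe != draft, "chars": len(safe)})
    return safe
```

Logging whether a redaction occurred, rather than the redacted values themselves, keeps the audit trail useful for anomaly review without reintroducing the PII it was meant to remove.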

Together, instructions, guardrails, and observability form a governance layer that ensures agents operate not only autonomously, but also responsibly and in alignment with organizational goals. To achieve this, it is critical to plan for and invest in AI management platforms and services that define agent workflows, orchestrate these interactions, and supervise AI agents. Key capabilities to look for in an AI management platform include:

- Prompt chaining where the output of one LLM call feeds the next, enabling multi-step reasoning;

- Instruction pipelines to standardize and manage how agents are guided;

- Agent orchestration frameworks for coordinating multiple agents across complex tasks; and

- Evaluation and observability (E&O) monitoring solutions that offer features like content and topic moderation, PII detection and redaction, and protection against prompt injection or “jailbreaking” attacks. Furthermore, because training models involves iterative experimentation, tuning, and distributed computation, it is paramount to have benchmarks and business objectives defined from the outset in order to optimize model performance through evaluation and validation.
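Prompt chaining, the first capability in the list above, can be sketched in a few lines: each step's prompt is built from the previous step's output. The `fake_llm` function is a hypothetical stand-in for a real model call, and the `{previous}` placeholder is a convention chosen for this example rather than any platform's API.

```python
from typing import Callable, List

LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call; it wraps the prompt so the chain is visible.
    return f"<{prompt}>"


def run_chain(llm: LLM, templates: List[str], initial_input: str) -> str:
    """Run each template in order, feeding the previous output into the next prompt."""
    result = initial_input
    for template in templates:
        prompt = template.format(previous=result)
        result = llm(prompt)
    return result
```

For example, `run_chain(fake_llm, ["Extract: {previous}", "Summarize: {previous}"], "notes")` first builds an extraction prompt from the raw notes, then builds a summarization prompt from the extraction result. Passing the model in as a callable keeps the chain logic testable without a live model.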

In contrast to the predictable expenses of standard software, AI project costs are highly dynamic and often underestimated during initial planning. Many organizations are grappling with unexpected AI cost overruns due to hidden expenses in data management, infrastructure, and maintenance for AI. This can severely impact budgets, especially for agentic environments. Tracking system utilization, scaling resources dynamically, and implementing automated provisioning allows organizations to maintain consistent performance and optimization for agent workloads, even under variable demand, while managing cost spikes and avoiding any surprises.
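One lightweight way to track utilization and catch cost spikes early is to record spend per agent and alert before the budget is exhausted. The sketch below assumes a flat, hypothetical price per 1,000 tokens and an 80% alert ratio; real pricing varies by model and provider, and infrastructure and data management costs would need to be tracked separately.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class CostTracker:
    price_per_1k_tokens: float  # hypothetical flat rate, not a real provider price
    monthly_budget: float
    spend_by_agent: Dict[str, float] = field(default_factory=dict)

    def record(self, agent: str, tokens: int) -> float:
        """Add the cost of one call to the agent's running total and return it."""
        cost = tokens / 1000 * self.price_per_1k_tokens
        self.spend_by_agent[agent] = self.spend_by_agent.get(agent, 0.0) + cost
        return cost

    @property
    def total(self) -> float:
        return sum(self.spend_by_agent.values())

    def over_budget(self, alert_ratio: float = 0.8) -> bool:
        """True once total spend reaches the alert ratio of the monthly budget."""
        return self.total >= alert_ratio * self.monthly_budget
```

Breaking spend down by agent makes it easier to see which workload is driving a spike, which is harder to recover from an aggregate invoice after the fact.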

Many traditional enterprise observability tools are now extending their capabilities to support AI-specific monitoring. Lifecycle management tools such as MLflow, Azure ML, Vertex AI, or Databricks help manage this process at enterprise scale by tracking model versions, automating retraining schedules, and managing deployments across environments. As with any new technology, an effective practice is to start with these existing solutions where possible, then close the gaps with agent-specific, fit-for-purpose tools to build a comprehensive oversight and governance framework.

5. Humans and Organizational Operating Models

There is no denying it—the integration of AI agents will transform ways of working worldwide. However, a significant gap still exists between the rapid adoption plans for AI agents and the reality on the ground. Why? Because too often, AI implementations are treated as technological experiments, with a focus on performance metrics or captivating demos. This approach frequently overlooks the critical human element needed for AI’s long-term success. Without a human-centered operating model, AI deployments continue to run the risk of being technologically impressive but practically unfit for organizational use.

Human Intervention and Human-In-the-Loop Validation: One of the most pressing considerations in integrating AI into business operations is the role of humans in overseeing, validating, and intervening in AI decisions. Agentic AI has the power to automate many tasks, but it still requires human oversight, particularly in high-risk or high-impact decisions. A transparent framework for when and how humans intervene is essential for mitigating these risks and ensuring AI complies with regulatory and organizational standards. Emerging practices are showing early success in combining agent autonomy with human checkpoints: subject matter experts (SMEs) are designated as part of the “AI product team” from the outset to define requirements and to ensure that AI agents stay focused on, and continue to meet, the right organizational use cases throughout development.
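A human checkpoint of the kind described above can be as simple as a risk threshold that decides whether an agent's proposed action runs automatically or waits for review. In this sketch, the `risk` score, the 0.5 threshold, and the in-memory review queue are all assumptions; how risk is scored and who reviews the queue are decisions for the SMEs on the AI product team.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProposedAction:
    description: str
    risk: float  # 0.0 (harmless) to 1.0 (high impact); the scoring method is assumed


# Actions waiting for a human decision; a real system would persist this queue.
REVIEW_QUEUE: List[ProposedAction] = []


def dispatch(action: ProposedAction, threshold: float = 0.5) -> str:
    """Execute low-risk actions; hold anything at or above the threshold for a human."""
    if action.risk >= threshold:
        REVIEW_QUEUE.append(action)
        return "queued_for_human_review"
    return "executed"
```

Making the threshold an explicit parameter gives the organization a single, auditable place to tighten or relax autonomy as trust in the agent changes.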

Shift in Roles and Reskilling: For AI to truly integrate into an organization’s workflow, a fundamental shift in the fabric of an organization’s roles and operating model is becoming necessary. Many roles as we know them today are shifting—even for the most seasoned software and ML engineers. Organizations are starting to rethink their structure to blend human expertise with agentic autonomy. This involves redesigning workflows to allow AI agents to automate routine tasks while humans focus on strategic, creative, and problem-solving roles.

Implementing and managing agentic AI requires specialized knowledge in areas such as AI model orchestration, agent–human interaction design, and AI operations. These skill sets are often underdeveloped in many organizations and, as a result, AI projects are failing to scale effectively. The gap isn’t just technical; it also includes a cultural shift toward understanding how AI agents generate results and the responsibility associated with their outputs. To bridge this gap, we are seeing organizations start to invest in restructuring data, AI, content, and knowledge operations/teams and reskilling their workforce in roles like AI product management, knowledge and semantic modeling, and AI policy and governance.

Ways of Working: To support agentic AI delivery at scale, it is becoming evident that agile methodologies must also evolve beyond their traditional scope of software engineering and adapt to the unique challenges posed by AI development lifecycles. Agentic AI requires an agile framework that is flexible, experimental, and capable of iterative improvements. This further requires deep interdisciplinary collaboration across data scientists, AI engineers, software engineers, domain experts, and business stakeholders to navigate complex business and data environments.

Furthermore, traditional CI/CD pipelines, which focus on code deployment, need to be expanded to support continuous model training, testing, human intervention, and deployment. Integrating ML/AI Ops is critical for managing agent model drift and enabling autonomous updates. The successful development and large-scale adoption of agentic AI hinges on these evolving workflows that empower organizations to experiment, iterate, and adapt safely as both AI behaviors and business needs evolve.

Conclusion

Agentic AI will not succeed through technology advancements alone. Given the inherent complexity and autonomy of AI agents, it is essential to evaluate organizational readiness and conduct a thorough cost-benefit analysis when determining whether an agentic capability is essential or merely a nice-to-have.

Success will ultimately depend on more than just cutting-edge models and algorithms. It also requires dismantling artificial, system-imposed silos between business and technical teams, while treating organizational knowledge and people as critical assets in AI design. Therefore, a thoughtful evolution of the organizational operating model and the seamless integration of AI into the business’s core is critical. This involves selecting the right project management and delivery frameworks, acquiring the most suitable solutions, implementing foundational knowledge and data management and governance practices, and reskilling, attracting, hiring, and retaining individuals with the necessary skill sets. These considerations make up the core building blocks for organizations to begin integrating AI agents.

The good news is that when built on the right foundations, AI solutions can be reused across multiple use cases, bridge diverse data sources, transcend organizational silos, and continue delivering value beyond the initial hype.

Is your organization looking to evaluate AI readiness? How well does it measure up against these readiness factors? Explore our case studies and knowledge base on how other organizations are tackling this or get in touch to learn more about our approaches to content and data readiness for AI.