We’ve all sifted through dense technical documentation which has way too much detail about features and products that aren’t even relevant to us – just to get to that one nugget of information we’re actually looking for (often on page 245 of a 500-page product manual). If we deliver personalized content to our end users, we can solve this problem by only showing people the information that’s relevant to them.

However, delivering personalized content introduces a specific set of Quality Assurance (QA) considerations. QA is the maintenance of a desired level of quality in a service or product, especially by means of attention to every stage of the process of delivery or production. It is vital to the success of application development and product delivery that QA and User Acceptance Testing (UAT) are carried out as an ongoing part of an application’s release cadence. QA and UAT help limit technical debt by surfacing issues throughout development, and their continuous feedback loop helps ensure the application is being built with personalization in mind.

That said, a QA test plan needs a clear set of standards to capture and evaluate how personalization is implemented throughout development. In this blog post, I’ll highlight some key tenets for a development team to follow to ensure personalization is continuously captured and reinforced through the thoughtful use of QA.

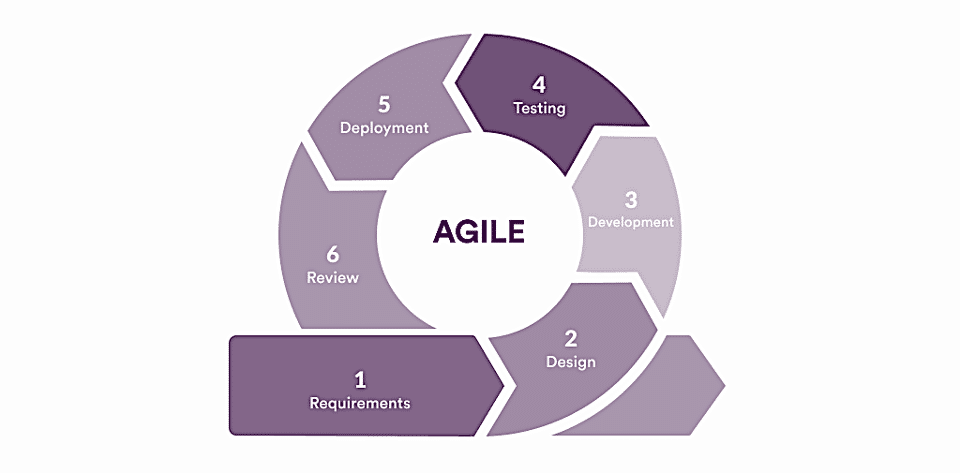

Agile Release Cadence and QA Scripting

When developing an application, releases are structured to follow a set cadence as defined by a release period. Each release will contain the features, patches, and other deliverables to the application developed by the team’s engineers during that release period. It is important to ensure proper UAT is carried out during each release period and accepted before the application is released to production. This starts with defining a QA script that users follow when executing UAT, one that exhaustively tests the code contributions and their associated use cases within the application. Moreover, when personalization comes into play, that QA script must include criteria not only to ensure code contributions are accepted but also to validate that what’s delivered achieves personalization.

Criteria that evaluate personalization or personalized content delivery must be properly defined within these QA scripts, in a way that is easily executable by the users testing proposed changes to the application. These criteria should be translated from the business requirements and personalization goals the engagement is meant to address. For example, consider search engineering for a complex technical resource center for a large business with many departments. Without personalization, UAT could be passed by searching multiple facets and seeing results that are tagged with or belong to those facets. With personalization in the QA script, UAT for those same search results is extended to cover things such as boosting results based on a user’s location and content findability based on a user’s role.
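To make the search example concrete, here is a minimal Python sketch of how those criteria could be written as checks. Everything in it is an assumption made for illustration: the `Doc` and `User` types, the toy `search` function, and the sample documents are hypothetical stand-ins, not a real search engine or EK's implementation. The first check is the facet-only criterion; the other two are the personalization criteria (role-based findability and location boosting).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    title: str
    facets: frozenset  # facets the document is tagged with
    roles: frozenset   # roles that should be able to find the document
    region: str        # region the document is most relevant to


@dataclass(frozen=True)
class User:
    role: str
    region: str


def search(docs, facet, user=None):
    """Toy search (hypothetical): filter by facet, then personalize for a user."""
    hits = [d for d in docs if facet in d.facets]
    if user is None:
        return hits
    visible = [d for d in hits if user.role in d.roles]
    # Stable sort: documents from the user's region are boosted to the top.
    return sorted(visible, key=lambda d: d.region != user.region)


def check_facet_only(docs, facet):
    """Non-personalized criterion: every result carries the searched facet."""
    return all(facet in d.facets for d in search(docs, facet))


def check_role_findability(docs, facet, user):
    """Personalized criterion: the user only finds content meant for their role."""
    return all(user.role in d.roles for d in search(docs, facet, user))


def check_location_boost(docs, facet, user):
    """Personalized criterion: no local result is ranked below a non-local one."""
    is_local = [d.region == user.region for d in search(docs, facet, user)]
    return is_local == sorted(is_local, reverse=True)


# Sample data, invented for this sketch.
DOCS = [
    Doc("Global HR policy", frozenset({"hr"}), frozenset({"hr", "engineer"}), "EU"),
    Doc("US benefits guide", frozenset({"hr"}), frozenset({"hr", "engineer"}), "US"),
    Doc("Payroll admin runbook", frozenset({"hr"}), frozenset({"hr"}), "US"),
]

engineer_us = User(role="engineer", region="US")
titles = [d.title for d in search(DOCS, "hr", engineer_us)]
```

In a QA script, each check would correspond to a plain-language step a tester can follow, for example "log in as a US engineer, search the HR facet, and confirm the payroll runbook does not appear."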

A successful development team knows the business requirements, personalization efforts, and use cases implemented by ongoing development. As a result, they will have an increased understanding of how to structure QA scripts so that proper evaluation of personalization is fully and accurately defined. In this way, the team can give users a testing plan that uncovers more potential bugs in the application, shortcomings of the feature(s) developed, and gaps in the application’s end-to-end functionality, all while ensuring personalization is measurable and fully understood by the entire team. Uncovering and understanding these shortcomings early limits technical debt by reducing the chance of discovering them after the application has gone live.

QA Processes and Execution

After QA scripts have been properly defined and distributed to users, it is time to carry out QA and ensure UAT is successful for the application to move to production.

As stated above, the development team has a much deeper understanding of the features proposed in the release. That familiarity can undermine the integrity of QA execution. An engineer executing QA scripts, for example, could come away with a false sense that a feature is intuitive, given their foundational understanding of how it works. Instead, execution should be left to the users, as they will offer the most critical and valuable feedback. There should also be a well-defined place for users to indicate a pass or fail status, along with a dedicated space for feedback and replication steps for any issue.
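One way to picture that "well-defined place" is as a small record per executed script. The sketch below is an assumption for illustration only: the `UatResult` fields, the `validate` rules, and the `ready_for_production` gate are hypothetical, and a team would more likely capture the same information in its test management or ticketing tool.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    PASS = "pass"
    FAIL = "fail"


@dataclass
class UatResult:
    script_id: str   # which QA script step was executed (hypothetical ID scheme)
    tester: str      # the user who executed it, not the engineer who built it
    status: Status
    feedback: str = ""
    replication_steps: List[str] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return what is missing for this result to be actionable."""
        problems = []
        if self.status is Status.FAIL and not self.feedback.strip():
            problems.append("failed result needs feedback")
        if self.status is Status.FAIL and not self.replication_steps:
            problems.append("failed result needs replication steps")
        return problems


def ready_for_production(results: List[UatResult]) -> bool:
    """UAT is accepted only if at least one result exists and all of them pass."""
    return bool(results) and all(r.status is Status.PASS for r in results)


results = [
    UatResult("search-role-01", "user_a", Status.PASS, feedback="Results matched my role."),
    UatResult("search-geo-02", "user_b", Status.FAIL),
]
```

Here the second result fails validation because a failure was recorded without feedback or replication steps, which is exactly the situation a dedicated feedback space is meant to prevent.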

Suppose an engineer has developed a feature that maps content metadata to user roles after the content is published. The engineer has rigorously unit-tested and functionally tested the code involved, and that familiarity biases them toward seeing the feature as intuitive. A user, exposed to the feature with a fresh set of eyes, will provide unbiased feedback. The user can then evaluate whether the feature personalizes content accurately, which keeps a continuous feedback loop on whether business goals are being met throughout the QA process.
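As a rough illustration of the kind of feature described above, the sketch below maps a content item's metadata to the roles that should see it. The `TOPIC_TO_ROLES` table, the `topics` metadata field, and both function names are hypothetical and chosen only for this example. An engineer's unit tests would typically cover `roles_for`; the `unmapped_topics` helper hints at what a user tends to catch instead, namely content that no role can find.

```python
# Hypothetical mapping from a metadata topic to the roles that should see it.
TOPIC_TO_ROLES = {
    "payroll": {"hr"},
    "deployment": {"engineer", "sre"},
    "onboarding": {"hr", "engineer", "sre"},
}


def roles_for(metadata):
    """Collect every role mapped to any of the content's topics."""
    roles = set()
    for topic in metadata.get("topics", []):
        roles |= TOPIC_TO_ROLES.get(topic, set())
    return roles


def unmapped_topics(metadata):
    """List topics with no role mapping; such content may be unfindable."""
    return [t for t in metadata.get("topics", []) if t not in TOPIC_TO_ROLES]


published = {"title": "Release checklist", "topics": ["deployment", "legal"]}
audience = roles_for(published)
gaps = unmapped_topics(published)
```

A tester who expects the legal team to see this checklist would flag the gap even though every unit test on the mapping passes.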

Meaningful Results and Feedback

Upon completion of QA and UAT, it is time to review the feedback that users left throughout their execution process. While it is imperative that analysts on the development team track, assess, and record feedback and success statuses of QA scripts, engineers should also be directly involved in this review process. Engineer involvement with a QA review is similar to user-driven story development. User-driven story development builds and estimates work from the viewpoint of those using the application. Engineer-driven QA review analyzes the work from the viewpoint of those familiar with the acceptance criteria of the work. As a result, engineer-driven QA increases the likelihood of an application’s success by fulfilling the needs of the use case, business goals, and quantifiable measurements of successful personalization.

Similarly, an engineer’s involvement in reviewing feedback and UAT results takes this principle further. By reviewing feedback, an engineer will have the opportunity to surface more thorough use cases given by actual users throughout the QA process.

Consider a subscription-based web form that allows users to subscribe themselves and their colleagues to content being delivered by an application. This feature has just been developed, and users have finished their acceptance testing. While nothing indicated a failure or blocker to moving code into production, multiple users left feedback asking for this web form to handle distribution lists and other types of pre-defined mailboxes. Since the engineers were heavily involved in the QA review of this release, they can quickly refine and estimate new work so that it can be pulled into future sprints. Not only will this save planning time, but it also presents a learning opportunity for the engineers to better understand the process of user-driven development and the business cases being solved by the application.

Conclusion

User Acceptance Testing and Quality Assurance plans are foundational to building and delivering an application that fully aligns with the business goals that drive personalization. They allow users to get the most out of the product and ensure the integrity of the application as a whole. This is especially important given how much personalization can improve content delivery. EK’s advanced content team has experience in a wide range of areas regarding personalized content delivery. Through our thoughtful approach to QA, we ensure that no use case is left behind and the correct audiences are met with the correct content. Contact us if you see opportunities for personalization in your business!