At EK, we work with many organizations that are looking to connect, standardize, and enrich their enterprise knowledge assets (both structured data and unstructured content) by implementing a Semantic Layer. Semantic Layer implementations have traditionally been driven largely by the need to provide high-quality, trusted, and richly labeled data for analytics and business intelligence; AI has intensified this momentum. By connecting isolated information sources through a unified framework of taxonomies, metadata, ontologies, and business glossaries, a Semantic Layer transforms data into actionable knowledge. It enables organizations to unlock the full value of their knowledge assets, ensuring that AI solutions deliver contextual, relevant, and accurate answers.

Despite having deep technical capabilities, many organizations find the transition from strategy to execution fraught with challenges. The result is initiatives that fizzle out after the pilot stage, Proofs of Concept (PoCs) that fail to scale to enterprise-wide deployments, and AI efforts that fail to deliver ROI.

In this blog, we outline three common pain points in taking Semantic Layer strategies to implementation. We provide our recommendations for avoiding these pitfalls, using real-world business cases to illustrate how organizations can achieve successful enterprise-wide deployment and realize ROI.

Pain Point 1: Failing to Build a Strong Business Case for Semantic Layers

| “We couldn’t find a strong enough narrative for why we needed semantics to support our work, which prevented us from implementing our strategy.” |

We have encountered many teams with exceptional technical skills. While they can easily explain the what and the how of a Semantic Layer, teams that can deftly articulate the why are less common. Because these technologies are powerful and flexible and enable many capabilities, teams often assume the business value is obvious when it is not. This inability to define the strategic value is the real business problem, as the Semantic Layer can feel conceptual and removed from everyday, tangible business pain points. Without a compelling answer to the why, the initiative will be starved of the executive buy-in and sustained investment needed to move past small pilots to a successful, enterprise-wide implementation that delivers tangible ROI.

The value of semantics is most effectively conveyed when the ROI of a semantic solution is aligned to the organization’s top business priorities. Ideally, teams will articulate a clear vision and value proposition for their Semantic Layer when crafting an initial strategy. Product-based companies may care about sales uplifts, while financial firms may prioritize asset management metrics. However, refining and communicating this message is an iterative and ongoing process. If they have not done so already, semantic teams have a critical opportunity to find and refine a value proposition for the Semantic Layer as part of a pilot or Proof of Concept implementation. Articulating the value and purpose of semantics is crucial for moving into enterprise-wide implementation.

A Practical Example

We saw this challenge emerge when EK partnered with a large pharmaceutical company that aimed to develop and implement a semantic strategy for its data governance and AI teams. Early in the engagement, it became clear that in order for this project to be successful, educating and engaging organizational leadership on the value and use of semantic technologies would be critical to gaining buy-in.

Instead of rushing to explain the technical intricacies of an AI implementation to executives, the EK team sought to understand the priority business challenges the organization was currently facing. Through our discovery process, the team identified executives’ frustration around one question: “Why are we purchasing so much data?” Nobody seemed to have the answer. As a result, purchasing teams were unable to advise leadership on data acquisition decisions. This was a costly and time-sensitive issue, as executives feared they were overspending on third-party healthcare data and paying for the same or similar data products multiple times.

The EK team discovered that the organization’s purchasing teams, data teams, and requesting teams were not in regular communication and did not follow standard metadata practices for tagging their respective knowledge assets. As a result, the information needed to answer this question could not be surfaced through their current processes. To articulate these findings to executives, the EK team developed a Proof of Concept that used semantic tagging standards to surface duplicative data. To strengthen the business case, we calculated tangible ROI metrics for reducing the cost of purchased data, based on the Proof of Concept’s ability to identify duplicative data, reusable data products, and unused data. We presented these ROI metrics alongside the pilot to the organization’s leadership.

By providing a relatable story tied to a tangible problem executives were grappling with, along with a roadmap for resolving it, we made “semantics” suddenly feel much less foreign to the organization’s vocabulary. Semantics became tangible as metadata and tagging techniques enabled the organization to clearly identify duplicate and near-duplicate data sets. Semantics opened the path for the organization to take accurate inventories and avoid repeated data purchases.
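As a rough illustration of how consistent tagging makes overlap visible, the Python sketch below compares the tag sets of purchased data products and flags pairs with high overlap. It is a minimal sketch under stated assumptions, not the method used in the engagement. The catalog entries and tags are hypothetical, and it assumes every product has already been tagged from a shared vocabulary. It uses Jaccard similarity with an arbitrary 0.6 threshold as one simple way to score overlap.

```python
from itertools import combinations

# Hypothetical catalog of purchased data products, each tagged with terms
# from an assumed shared controlled vocabulary (all names are illustrative).
CATALOG = {
    "vendor_a_claims_2023": {"claims", "us", "commercial", "2023", "patient-level"},
    "vendor_b_claims_2023": {"claims", "us", "commercial", "2023", "patient-level"},
    "vendor_c_rx_2023": {"prescriptions", "us", "2023", "patient-level"},
    "vendor_d_claims_2023": {"claims", "us", "commercial", "2023"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tags two assets have in common (1.0 means identical tag sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_overlaps(catalog: dict[str, set[str]], threshold: float = 0.6):
    """Return (asset, asset, score, label) for every pair at or above threshold."""
    results = []
    for (name_a, tags_a), (name_b, tags_b) in combinations(catalog.items(), 2):
        score = jaccard(tags_a, tags_b)
        if score >= threshold:
            label = "duplicate" if score == 1.0 else "near-duplicate"
            results.append((name_a, name_b, round(score, 2), label))
    return results


if __name__ == "__main__":
    for row in find_overlaps(CATALOG):
        print(row)
```

Run as written, it reports vendors A and B as exact duplicates (identical tags) and vendor D as a near-duplicate of both. The scoring is only as good as the tagging. Without a shared vocabulary, a dataset tagged “claims” by one team and “insurance claims” by another would not match, which is why the tagging standards come first.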

Leaders now had a clear understanding of where their organization’s challenges lay, which helped them plan their next steps toward answering the questions they had been asking. By telling similar stories for your organization and tapping into the value proposition of semantics, you can avoid delays and roadblocks in taking your strategy forward to implementation.

Pain Point 2: Lack of a Cohesive Operating Model to Coordinate Teams

| “Our teams struggled to align on how best to collaborate in light of new AI implementation goals set by our organization.” |

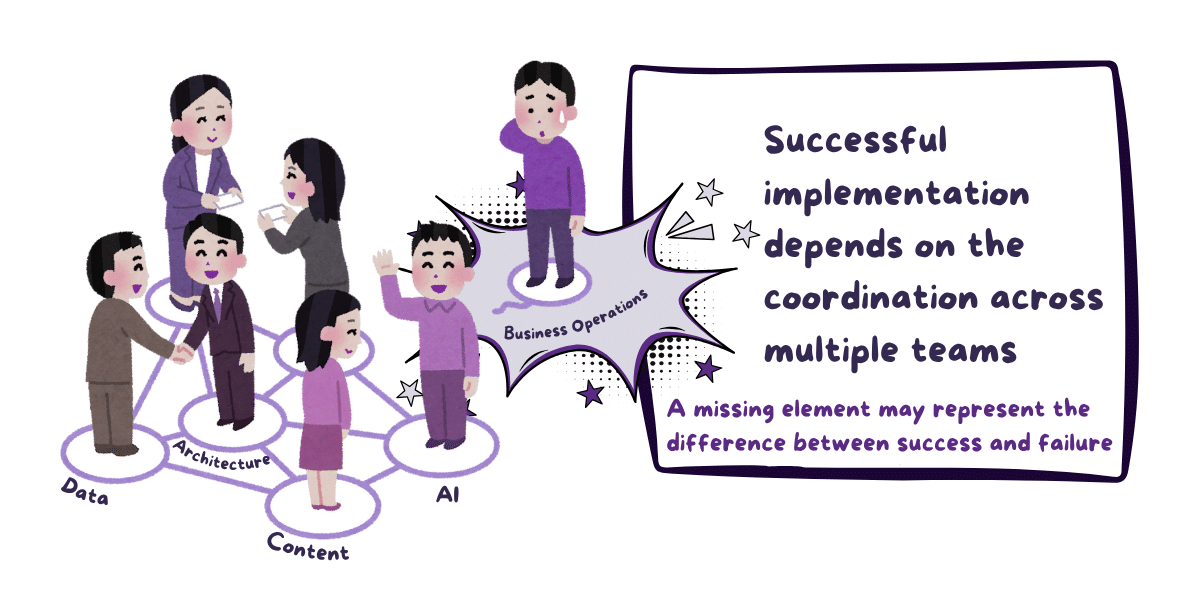

The Semantic Layer weaves together knowledge assets throughout the organization, and this implies that orchestrating the components necessary for its implementation requires traditionally siloed teams to work together. These include:

- The teams responsible for the sources of the knowledge assets to be ingested by the Semantic Layer (Data Lakes, relational databases, enterprise applications and systems, document management systems, and more);

- Teams responsible for consuming and presenting Semantic Layer outputs, including search, BI tools, and AI agents;

- Teams that manage the technical architecture that integrates the Semantic Layer with the rest of the technical landscape; and

- The teams that manage the individual components of the Semantic Layer itself, such as taxonomies, ontologies, knowledge graphs, and business glossaries.

Many of these teams have traditionally worked in their own silos with little need to collaborate, each controlling its own systems under its own governance model. However, implementing a Semantic Layer, especially in the age of AI, requires much more coordination across teams. Once they begin working together toward a Semantic Layer implementation, we see challenges arise from ambiguity around roles and responsibilities and from a lack of communication about what each team needs to do to be successful.

Without proper coordination, implementation efforts risk grinding to a halt or requiring significant rework to advance. We have seen multiple instances where different teams within an organization try to solve similar challenges, which leads to overlapping or competing efforts. Furthermore, teams have a difficult time assembling a comprehensive and cohesive view of their semantic solution, and consequently fail to present a united front to decision-making executives. These challenges prevent enterprise-wide implementation. To set their implementation efforts up for success, teams should be:

- Aware of their role in contributing to semantic initiatives;

- Actively willing to adopt new ways of working; and

- Aware of how they stand to benefit from the change.

Because so many teams share a stake in these complex implementations, the need for a cohesive operating model has become apparent. An operating model defines the interaction points between teams and the rest of the organization, which is essential for coordination. By establishing repeatable processes for engaging diverse functions across the organization, an operating model drives coordination and consensus from the start. A cohesive operating model can also set clear expectations for business stakeholders’ involvement in developing semantic functionality, ensuring that teams use valuable business SME time efficiently.

A Practical Example

A recent example of this challenge arose from our work with an investment management organization, as they sought to advance their semantic capabilities in support of their AI implementation. The client’s goal was to enrich their knowledge assets with additional business context and improve their data labeling, while also making their AI outputs more traceable and explainable. Their teams were organized along traditional silos where knowledge, data, and content were managed separately. With emerging organizational AI initiatives, their 12+ supporting teams lacked the right approach to consistently deploy, monitor, evaluate, and continuously improve their AI solution. This was leading to frustration, misalignment, and duplicative efforts across teams when requesting inputs or feedback from business stakeholders.

As a part of the solution, the EK team evaluated the organization’s existing operating model and outlined the following improvements:

- Redefining roles and responsibilities for the critical individuals and processes needed for their AI initiatives across all 12+ teams, including new competencies for facilitating the delivery of semantic capabilities.

- Establishing a new organizational structure that considers a dedicated team responsible for the comprehensive implementation and governance of AI, as well as additional teams to manage and enhance specific semantic components such as taxonomies and ontologies.

- Rethinking the lifecycle for implementing AI and semantic features, providing a clear and repeatable approach for designing, piloting, scaling, and implementing new AI- and semantic-based capabilities for the business.

- Identifying intervention points along the implementation lifecycle to define which teams and individuals need to coordinate to deliver tasks, providing clarity around accountability, and streamlining the way supporting teams engage business stakeholders.

The result of establishing the cohesive operating model was immediate and tangible: We equipped teams with clear, defined ways to collaborate, ensuring the collective effort better supported the organization’s overarching AI objectives. Importantly, this clarified not only the interaction points for all 12+ supporting teams but also ensured the teams dedicated to managing the core Semantic Layer components had a distinct, well-defined place within the new structure. Moreover, this alignment aims to streamline cross-team communication and their collaboration with business users. Ultimately, this shift ensures strategies move smoothly towards successful implementation, allowing teams to shake hands on meaningful progress instead of pointing fingers when complexity arises.

Pain Point 3: Failing to Ensure Knowledge Assets are Ready

| “We don’t feel ready for the technology recommendations given to us. The push for results is strong at my organization, and I am not sure if my content is ready to support the strategy my leadership wants to implement.” |

Regardless of the power and versatility of a Semantic Layer, organizations will be unable to realize its full benefits if it is not ingesting and enhancing the right knowledge assets in the first place. Enthusiasm to adopt semantics, especially in support of AI, can lead teams to rush implementation. They then find that advanced solutions lacking context-rich, accurate, complete, and consistent knowledge fail to deliver the expected outputs and insights. Instead, AI hallucinates, BI dashboards display outdated data, and search surfaces sensitive information to unauthorized users. Organizations need to make their knowledge assets ready for the Semantic Layer.

So, what does ready mean? Ideally, knowledge assets will be correct, complete, consistent, contextual, and compliant. Preparation follows a slightly different process depending on whether the assets are sourced from structured data or unstructured content, but in general, organizations will need to:

- Identify and define their prioritized knowledge assets.

- Ensure the quality of their existing knowledge assets.

- Capture tribal knowledge that may not be accessible to enterprise systems or machine-readable.

A Practical Example

EK worked with a large federal regulatory agency to build a Semantic Layer to enable AI use cases. Previous attempts had languished due to issues with content quality and content volume, leading to a lack of confidence in the implementation team. The agency felt there was a lot of conversation in the organization around “AI”, with a keen interest in implementing solutions quickly, but the team wasn’t sure it trusted its current knowledge assets to support the asks coming from leadership. This left them frustrated, technology-averse, and unsure of their next steps.

As part of a larger roadmap, the EK team identified a content audit as a critical first step in getting their knowledge assets ready. The audit was designed to give the team insight into (1) what the full content landscape looked like, (2) how much duplicative content existed, and (3) the degree to which content was complete and up to date enough to be reliably surfaced through AI solutions. By conducting a content audit, the team was positioned to identify the gaps its implementation roadmap would need to close to deliver trustworthy AI solutions, and to establish itself as a trusted partner in advancing leadership’s strategic goals.
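The three audit objectives map naturally onto simple automated checks. The Python sketch below is one hypothetical way to start.

- It counts the content inventory.
- It flags exact duplicates by hashing case- and whitespace-normalized text.
- It treats an item as incomplete if any of an assumed set of required metadata fields is empty.
- It treats an item as out of date if it has not been reviewed within an assumed 365-day window.

The field names, review window, and sample items are illustrative assumptions, not the agency’s actual criteria.

```python
import hashlib
from dataclasses import dataclass
from datetime import date

# Hypothetical audit rules: adjust to the organization's own standards.
REQUIRED_FIELDS = ("title", "owner", "topic")  # assumed metadata fields
MAX_AGE_DAYS = 365  # assumed review cycle


@dataclass
class ContentItem:
    item_id: str
    body: str
    metadata: dict
    last_reviewed: date


def fingerprint(text: str) -> str:
    """Hash case- and whitespace-normalized text so trivial variants match."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def audit(items: list[ContentItem], today: date) -> dict:
    """Summarize volume, exact duplicates, incomplete metadata, and staleness."""
    seen: dict[str, str] = {}  # fingerprint -> first item_id with that text
    duplicates, incomplete, stale = [], [], []
    for item in items:
        fp = fingerprint(item.body)
        if fp in seen:
            duplicates.append((item.item_id, seen[fp]))
        else:
            seen[fp] = item.item_id
        # Missing or empty required fields make an item incomplete.
        if any(not item.metadata.get(field) for field in REQUIRED_FIELDS):
            incomplete.append(item.item_id)
        # Items not reviewed within the assumed cycle are treated as stale.
        if (today - item.last_reviewed).days > MAX_AGE_DAYS:
            stale.append(item.item_id)
    return {
        "total": len(items),
        "duplicates": duplicates,
        "incomplete": incomplete,
        "stale": stale,
    }


if __name__ == "__main__":
    sample = [
        ContentItem("doc-1", "Guidance on annual reporting deadlines",
                    {"title": "Reporting deadlines", "owner": "Policy", "topic": "reporting"},
                    date(2024, 11, 1)),
        ContentItem("doc-2", "guidance on annual  reporting Deadlines",
                    {"title": "Reporting deadlines (copy)", "owner": "", "topic": "reporting"},
                    date(2022, 3, 15)),
        ContentItem("doc-3", "Inspection checklist",
                    {"title": "Checklist", "owner": "Field Ops", "topic": "inspections"},
                    date(2025, 1, 10)),
    ]
    print(audit(sample, today=date(2025, 6, 1)))
```

With the sample items, the audit flags doc-2 for three reasons:

- It is a duplicate of doc-1 (the texts differ only in case and spacing).
- It is incomplete (its owner is blank).
- It is stale (last reviewed in 2022).

Exact hashing misses paraphrased copies, so a fuller audit would pair checks like these with near-duplicate detection and subject-matter review.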

Final Remarks

Getting semantic implementations wrong can be costly, time-consuming, and frustrating. Moving from strategy to implementation of the Semantic Layer can be fraught with challenges such as defending the strategy’s value, team misalignment, and knowledge asset quality issues; however, the situation is far from untenable. Navigating this pivotal transition effectively requires focus on three critical components:

- Establishing a strong ROI story and business case that maps to industry and business priorities for sustaining engagement and buy-in;

- Designing an operating model to support coordination of teams; and

- Ensuring your knowledge assets are ready.

We have worked with organizations across industries to realize the potential of building a Semantic Layer to enable effective AI, advanced analytics, and other valuable use cases. Contact us for more information on establishing a pragmatic strategy for your Semantic Layer and smoothly transitioning to its implementation.