In the past year, many of the organizations EK has partnered with have been developing Large Language Model (LLM) based Proofs of Concept (PoCs). These projects are often championed by an enthusiastic IT team or internal initiative, and the low barrier to entry and low cost of LLM development make them easy for executives to greenlight. Despite initial optimism, these LLM PoCs rarely reach the enterprise-grade implementations promised, due to factors such as limited organizational buy-in, technical complexity, security concerns, misalignment on content readiness for AI solutions, and a lack of investment in key infrastructure. For example, Gartner has predicted that 30% of generative AI projects will be abandoned after PoC by the end of 2025. This blog provides an overview of EK’s approach to evaluating and roadmapping an LLM solution from PoC to production, and highlights several dimensions important to successfully scaling an LLM-based enterprise solution.

Organizational Implementation Considerations:

Before starting on the technical journey from “RAGs to Riches”, an organization should weigh several considerations before, during, and after creating a production solution. Taking each of these into account gives a production LLM solution a much higher chance of success.

Before: Aligning Business Outcomes

Prior to building out a production LLM solution, a team will have developed a PoC LLM solution that is able to answer a limited set of use cases. Before the start of production development, it is imperative that business outcomes and the priorities of key stakeholders are aligned with project goals. This often looks like mapping business outcomes – such as enhanced customer interactions, operational efficiency, or reduced compliance risk – to quantifiable measures such as shorter response times and improved findability of information. It is important to ensure these business goals carry through from development to production and on to adoption by customers. Beyond meeting technical requirements, setting clear customer and organizational goals will help to ensure the production LLM solution continues to have organizational support throughout its entire lifecycle.

During: Training Talent and Proving Solutions

Building out a production LLM solution will require a team with specialized skills in natural language processing (NLP), prompt engineering, semantic integration, and embedding strategies. In addition, EK recommends investing resources into content strategists and SMEs who understand the state of their organization’s data and/or content. These roles in particular are critical to help prepare content for AI solutions, ensuring the LLM solution has comprehensive and semantically meaningful content. Organizations that EK has worked with have successfully launched and maintained production LLM solutions by proactively investing in these skills for organizational staff. This helps organizations build resilience in the overall solution, driving success in LLM solution development.

After: Infrastructure Planning and Roadmapping

To maintain a production LLM solution after it has been deployed to end-users, organizations must account for the infrastructure investments and operational costs needed, as well as necessary content and data maintenance. These may include enterprise licensing, additional software infrastructure, and ongoing support costs. While many of these additional costs can be mitigated by effectively aligning business outcomes and training organizational talent, there still needs to be a roadmap for, and investment in, the future infrastructure (both systems and content) of the LLM production solution.

Technical Criteria for Evaluating LLM PoCs:

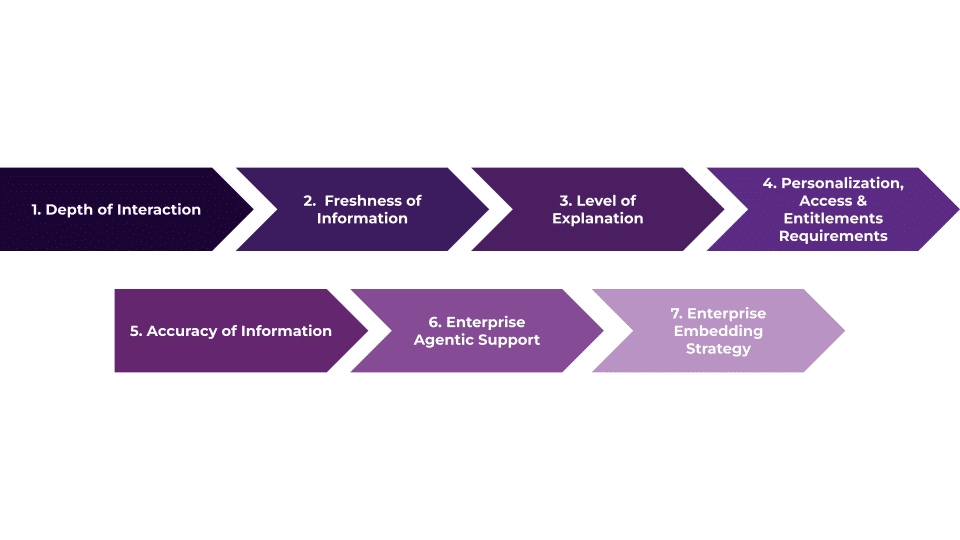

In parallel with the organizational implementation considerations, and from EK’s depth of experience in developing LLM MVPs, designing enterprise AI architecture, and implementing more advanced LLM solutions such as Semantic RAG, EK has developed 7 key dimensions that can be used to evaluate the effectiveness of an LLM PoC:

Figure 1: Dimensions for Evaluating an LLM Solution

1. Depth of Interaction: refers to how deeply and dynamically users can engage with the LLM solution. At a lower level, interaction might simply involve asking questions and receiving direct answers, while at the highest level, intelligent agents act on behalf of the user autonomously to leverage multiple tools and execute tasks.

2. Freshness of Information: describes how frequently the content and data behind the LLM solution are updated and how quickly users receive these updates. At lower freshness levels, data is updated infrequently. At higher freshness levels, data is updated frequently or even continuously, which helps to ensure users are always interacting with the most current and accurate information available.

3. Level of Explanation: refers to how transparently the LLM solution communicates the rationale behind its responses. At a lower level of explanation, users simply receive answers without clear reasoning. In contrast, a high level of explanation would include evidence, citations, audit trails, and a clear path showing how information was retrieved.

4. Personalization, Access & Entitlements Requirements: describes how specifically content and data are tailored and made accessible based on user identity, roles, behavior, or needs. At lower levels, content is available to all users without personalization or adaptations. At higher levels, content personalization is integrated with user profiles, entitlements, and explicit access controls, ensuring users only see highly relevant, permissioned content.

5. Accuracy of Information: refers to how reliably and correctly the LLM solution can answer user queries. At lower levels, users receive reasonable answers that may have minor ambiguities or occasional inaccuracies. At the highest accuracy level, each response is traced back to original source materials and is cross-validated with authoritative sources.

6. Enterprise Agentic Support: describes how the LLM solution interacts with the broader enterprise AI ecosystem, and coordinates with other AI agents. At the lowest level, the solution acts independently without any coordination with external AI agents. At the highest level, the solution seamlessly integrates as a consumer and provider within an ecosystem of other intelligent agents.

7. Enterprise Embedding Strategy: refers to how the LLM solution converts information into vector representations (embeddings) to support retrieval. At a lower level, embeddings are simple vector representations with minimal or no structured metadata. At the highest levels, embeddings include robust metadata and are integrated with enterprise context through semantic interpretation and ontology-based linkages.
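To make the higher levels of dimensions 4 and 7 more concrete, the following is a minimal sketch of a retrieval step in which each embedded chunk carries metadata and is filtered by entitlements before ranking. The `Chunk` fields, the role names, the two-dimensional vectors, and the `retrieve` function are all hypothetical illustrations, not part of any specific EK implementation or vector database API.

```python
from dataclasses import dataclass, field
from math import sqrt


@dataclass
class Chunk:
    text: str
    vector: list[float]  # toy embedding; real embeddings have many more dimensions
    tags: set[str] = field(default_factory=set)           # hypothetical taxonomy tags
    allowed_roles: set[str] = field(default_factory=set)  # hypothetical entitlements


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], user_roles: set[str],
             chunks: list[Chunk], top_k: int = 2) -> list[Chunk]:
    """Drop chunks the user is not entitled to, then rank the rest by similarity."""
    permitted = [c for c in chunks if c.allowed_roles & user_roles]
    ranked = sorted(permitted, key=lambda c: cosine(query_vec, c.vector), reverse=True)
    return ranked[:top_k]


# Made-up sample data for illustration only.
chunks = [
    Chunk("Fab onboarding checklist", [0.9, 0.1], {"onboarding"}, {"engineer", "hr"}),
    Chunk("Compensation bands", [0.8, 0.2], {"hr-policy"}, {"hr"}),
    Chunk("Cafeteria menu", [0.1, 0.9], {"facilities"}, {"engineer", "hr"}),
]

results = retrieve([1.0, 0.0], {"engineer"}, chunks)
print([c.text for c in results])
```

Filtering before ranking means a restricted chunk can never surface in a response, even when it is the closest semantic match to the query.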

Each organization will weigh the technical criteria differently based on the unique use cases and requirements of its LLM solution. For example, an organization that is working on a content generation use case could place greater emphasis on Level of Explanation and Freshness of Information, while an organization that is working on an information retrieval use case may care more about Personalization, Access & Entitlements Requirements. Reaching agreement on the level of proficiency needed within each dimension is an integral part of the evaluation process. Leveraging this standard, EK has worked with organizations across various industries and diverse LLM use cases to optimize their solutions.
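One way to picture this weighting is as a simple gap analysis across the seven dimensions. The sketch below assumes a hypothetical 1 to 5 maturity scale and made-up weights; the scale, the numbers, and the `prioritize` function are illustrative assumptions rather than EK's actual scoring method.

```python
DIMENSIONS = [
    "Depth of Interaction",
    "Freshness of Information",
    "Level of Explanation",
    "Personalization, Access & Entitlements Requirements",
    "Accuracy of Information",
    "Enterprise Agentic Support",
    "Enterprise Embedding Strategy",
]


def prioritize(current: dict, target: dict, weights: dict) -> list[tuple[str, int]]:
    """Return (dimension, weighted gap) pairs, largest weighted gap first."""
    gaps = []
    for dim in DIMENSIONS:
        gap = max(target[dim] - current[dim], 0)  # no credit for exceeding the target
        gaps.append((dim, gap * weights[dim]))
    return sorted(gaps, key=lambda g: g[1], reverse=True)


# Hypothetical assessment: the PoC sits at level 2 everywhere.
current = {dim: 2 for dim in DIMENSIONS}

# Hypothetical targets agreed with stakeholders.
target = dict(current)
target["Accuracy of Information"] = 5
target["Freshness of Information"] = 4
target["Level of Explanation"] = 3

# Hypothetical weights reflecting the use case's priorities.
weights = {dim: 1 for dim in DIMENSIONS}
weights["Accuracy of Information"] = 3
weights["Freshness of Information"] = 2

ranked = prioritize(current, target, weights)
for dim, score in ranked[:3]:
    print(f"{dim}: {score}")
```

With these made-up inputs, Accuracy of Information ranks first, followed by Freshness of Information, which gives a rough ordering for where roadmap effort might go.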

Additionally, EK recommends that an organization undergoing an LLM PoC evaluation also conduct an in-depth analysis of content relevant to their selected use case(s). This enables them to gain a more comprehensive understanding of its quality – including factors like completeness, relevancy, and currency – and can help unearth gaps in what the LLM may be able to answer. The analysis informs the testing phase by guiding the creation of each test, as well as the expected outcomes. Its findings can generally be categorized across three main areas of remediation:

- Content Quality – The content regarding a certain topic doesn’t explicitly exist or is not standardized – this may necessitate creating dummy data to enable certain types of tests.

- Content Structure – The way certain content is structured varies – we can likely posit that one particular structure will give more accurate results than another. This may necessitate creating headings to indicate clear hierarchy on pages, and templates to consistently structure content.

- Content Metadata – Contextual information that may be useful to users is missing from content. This may necessitate establishing a taxonomy to tag with a controlled vocabulary, or an ontology to establish relationships between concepts.
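As a rough illustration of how these three areas could be checked automatically during a content analysis, the sketch below flags simple gaps in a single document record. The record fields (`body`, `last_updated`, `headings`, `tags`), the one-year staleness threshold, and the `audit` function are hypothetical; a real analysis would rely on SME review and richer rules.

```python
from datetime import date


def audit(doc: dict, today: date, max_age_days: int = 365) -> list[str]:
    """Return a list of findings grouped by remediation area."""
    findings = []

    # Content Quality: is there content at all, and is it current?
    last_updated = doc.get("last_updated")
    if not doc.get("body", "").strip():
        findings.append("quality: no content")
    elif last_updated is None:
        findings.append("quality: currency unknown")
    elif (today - last_updated).days > max_age_days:
        findings.append("quality: stale")

    # Content Structure: are there headings to indicate hierarchy?
    if not doc.get("headings"):
        findings.append("structure: no headings")

    # Content Metadata: is the document tagged with taxonomy terms?
    if not doc.get("tags"):
        findings.append("metadata: no taxonomy tags")

    return findings


# Made-up document record for illustration only.
doc = {
    "body": "Steps for requesting cleanroom access...",
    "last_updated": date(2023, 1, 10),
    "headings": [],
    "tags": ["onboarding"],
}

findings = audit(doc, today=date(2025, 1, 10))
print(findings)
```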

Technical Evaluation of LLM PoCs In Practice:

Putting the organizational implementation and technical considerations into practice, EK recently completed an engagement with a leading semiconductor manufacturer, employing this standard process to evaluate their PoC LLM search solution. The organization had developed a PoC search solution that answered questions against a series of user-selected PDFs relating to the company’s technical onboarding documentation. EK worked with the organization to align on key functional requirements for a production LLM solution via a capability assessment based on the 7 dimensions EK has identified. In parallel, EK completed an analysis of in-scope content for the use case. The results of this content evaluation informed which content components should be prioritized as candidates for the testing plan.

After aligning on priority requirements (in this case, accuracy and freshness of information), EK developed and conducted a testing plan for parts of the PoC LLM. To turn the testing plan into actionable insights, EK created a four-phase RAG Evaluation & Optimization Workflow. This workflow helped produce a present-state snapshot of the LLM solution, a target-state benchmark, and a bridging roadmap that prioritizes retriever tuning, prompt adjustments, and content enrichment. Based on the workflow results, stakeholders at the organization were able to easily interpret how improved semantics, content quality, structure, and metadata would improve the results of their LLM search solution.
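The four phases of the workflow are not detailed in this post, so the sketch below illustrates only the general idea of scoring a test plan to get a present-state snapshot and comparing it with a target-state benchmark. The `EvalCase` fields, the `ask` interface, the stubbed PoC responses, and the 0.9 targets are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    question: str
    expected_source: str  # document that should be retrieved
    expected_phrase: str  # phrase a correct answer should contain


def evaluate(cases: list[EvalCase],
             ask: Callable[[str], tuple[str, list[str]]]) -> dict[str, float]:
    """Score a PoC; ask(question) returns (answer_text, retrieved_source_ids)."""
    hits = correct = 0
    for case in cases:
        answer, sources = ask(case.question)
        hits += case.expected_source in sources
        correct += case.expected_phrase.lower() in answer.lower()
    n = len(cases)
    return {"retrieval_hit_rate": hits / n, "answer_accuracy": correct / n}


def stub_poc(question: str) -> tuple[str, list[str]]:
    """Stand-in for the PoC under test, with canned (made-up) responses."""
    canned = {
        "How do I request cleanroom access?":
            ("Submit the access form to your manager.", ["onboarding.pdf"]),
        "Which tool logs wafer lots?":
            ("I am not sure.", ["safety.pdf"]),
    }
    return canned[question]


cases = [
    EvalCase("How do I request cleanroom access?", "onboarding.pdf", "access form"),
    EvalCase("Which tool logs wafer lots?", "tools.pdf", "lot tracker"),
]

scores = evaluate(cases, stub_poc)                            # present-state snapshot
target = {"retrieval_hit_rate": 0.9, "answer_accuracy": 0.9}  # hypothetical benchmark
gaps = {metric: round(target[metric] - scores[metric], 2) for metric in target}
print(scores, gaps)
```

Tracking retrieval and answer metrics separately helps show whether a failure calls for retriever tuning and content enrichment or for prompt adjustments.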

In the following blogs of the “RAGs to Riches” series, EK will explain the process for developing a capability assessment and testing plan for LLM-based PoCs. These blogs will expand further on how each of the technical criteria can be measured, as well as how to develop a long-term strategy for production solutions.

Conclusion

Moving an LLM solution from proof-of-concept to enterprise production is no small feat. It requires careful attention to organizational alignment, strong business cases, technical planning, compliance readiness, content optimization, and a commitment to ongoing talent development. Addressing these dimensions systematically will position your organization to turn AI innovation into a durable competitive advantage.

If you are interested in having EK evaluate your LLM-based solution and help build out an enterprise-grade implementation, contact us.