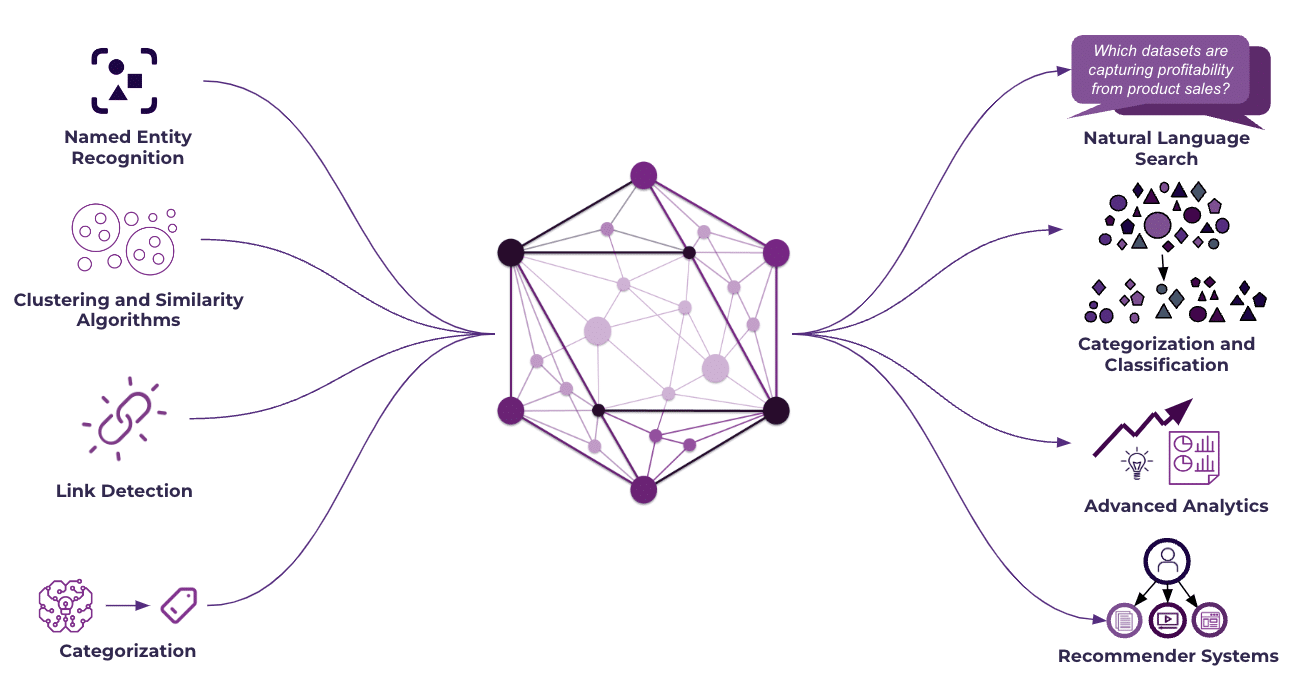

Two significant recent trends in knowledge management, artificial intelligence (AI) and the semantic layer, are reshaping the way organizations interact with their data and derive actionable insights from it. Taking advantage of the interplay between the two is key to advancing data extraction, organization, interpretation, and application at the enterprise level. By integrating AI techniques with semantic components and understanding, EK is empowering our clients to break down data silos, connect vast amounts of organizational data assets in all forms, and comprehensively transform their knowledge and information landscapes. In this blog, I will walk through how AI techniques such as named entity recognition, clustering and similarity algorithms, link detection, and categorization facilitate data curation for input into a semantic layer, which in turn feeds downstream applications for advanced search, classification, analytics, chatbots, and recommendations.

Understanding the Semantic Layer

The semantic layer is a standardized framework that serves as a bridge between raw data and user-facing applications by organizing, abstracting, and connecting data and knowledge from structured, unstructured, and semi-structured formats. It encompasses components such as taxonomies, ontologies, business glossaries, knowledge graphs, and related tooling to provide organizations with a unified and contextualized view of their data and information. This enables intuitive user interactions and empowers analysis and decision-making informed by business context.

AI Techniques in the Semantic Layer

The following AI techniques are useful for curating semantic layer input and powering downstream applications:

1. Named Entity Recognition

Named entity recognition (NER) is a natural language processing (NLP) technique that identifies and categorizes entities within text, such as people, organizations, or locations. By leveraging NER, organizations can automate the extraction of common entities from large amounts of unstructured text to quickly identify key information. Identifying, extracting, and labeling common entities consistently across datasets streamlines the normalization of data in varied formats from disparate sources, easing its integration into the semantic layer. These enriched semantic representations enable organizations to connect information and surface contextual insights from vast amounts of complex data.
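To make the idea concrete, here is a minimal sketch of entity extraction driven by a term list. It assumes a dictionary (gazetteer) lookup rather than a trained statistical NER model, and the `GAZETTEER` terms, labels, and sample sentence are hypothetical stand-ins for entries that would normally come from a taxonomy.

```python
import re
from typing import NamedTuple


class Entity(NamedTuple):
    text: str   # matched surface form as it appears in the document
    label: str  # entity type, e.g. PERSON or PROJECT
    start: int  # character offset where the match begins
    end: int    # character offset just past the match


# Hypothetical gazetteer: surface forms mapped to entity types.
GAZETTEER = {
    "Jane Doe": "PERSON",
    "Project Atlas": "PROJECT",
    "propulsion": "TOPIC",
}


def extract_entities(text: str, gazetteer: dict[str, str]) -> list[Entity]:
    """Find whole-word, case-insensitive gazetteer matches without overlaps."""
    entities: list[Entity] = []
    taken: list[tuple[int, int]] = []  # spans already claimed by a match
    # Try longer terms first so they win over shorter terms they contain.
    for term in sorted(gazetteer, key=len, reverse=True):
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        for match in pattern.finditer(text):
            overlaps = any(
                match.start() < end and start < match.end()
                for start, end in taken
            )
            if overlaps:
                continue
            taken.append((match.start(), match.end()))
            entities.append(
                Entity(match.group(), gazetteer[term], match.start(), match.end())
            )
    # Return entities in reading order.
    return sorted(entities, key=lambda e: e.start)


if __name__ == "__main__":
    sample = "Jane Doe leads Project Atlas, which studies propulsion."
    for entity in extract_entities(sample, GAZETTEER):
        print(entity.label, entity.text)
```

The extracted labels and offsets can then be stored as structured metadata alongside each document. A production pipeline would more likely combine a lookup like this with a trained model to catch entities that are not yet in the term list.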

For a federally funded engineering research center, EK leveraged taxonomy and ontology models to automatically extract key entities from a vast repository of unstructured documents and add structured metadata, ultimately building an enterprise knowledge graph for the organization’s semantic layer. This supported a semantic search platform for users to conduct natural language searches and intuitively browse and navigate through documents by key entities such as person, project, and topic, reducing time spent searching from several days to 5 minutes.

2. Clustering and Similarity Algorithms

Clustering algorithms (e.g., K-means, DBSCAN) partition a dataset into distinct groups of similar objects, surfacing patterns and commonalities among unlabeled or uncategorized data elements. Similarity measures (e.g., cosine similarity, Euclidean distance, Jaccard similarity) quantify how alike or different two objects or sets of objects are. Both are crucial in semantic layer-driven use cases and applications such as chatbots, recommendation engines, and semantic search.
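As a rough illustration, the sketch below computes cosine and Jaccard similarity over simple bag-of-words representations and then groups texts with a greedy, threshold-based pass. This is a simplification: the grouping step is not K-means or DBSCAN, word overlap is only a proxy for semantic similarity, and the function names and the 0.5 threshold are assumptions chosen for the example.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: str, b: str) -> float:
    """Cosine of the angle between the word-count vectors of two texts."""
    va, vb = Counter(tokens(a)), Counter(tokens(b))
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


def jaccard_similarity(a: str, b: str) -> float:
    """Share of distinct words that two texts have in common."""
    sa, sb = set(tokens(a)), set(tokens(b))
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def greedy_cluster(texts: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Assign each text to the first cluster whose seed text is similar enough.

    The first member of each cluster acts as its seed. A text that matches
    no existing seed starts a new cluster.
    """
    clusters: list[list[str]] = []
    for text in texts:
        for cluster in clusters:
            if cosine_similarity(text, cluster[0]) >= threshold:
                cluster.append(text)
                break
        else:
            clusters.append([text])
    return clusters


if __name__ == "__main__":
    # Hypothetical free-text descriptions.
    descriptions = [
        "vendor data breach risk",
        "risk of vendor data breach",
        "interest rate volatility",
        "volatility in interest rate markets",
    ]
    for group in greedy_cluster(descriptions):
        print(group)
```

In practice, the word-count vectors would typically be replaced with text embeddings, and the greedy pass with an established clustering algorithm, with subject matter experts reviewing the resulting groups.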

As part of a global financial institution’s semantic layer implementation for their risk management initiatives, EK led taxonomy development efforts by implementing a semi-supervised clustering algorithm to group a large volume of inconsistent and unwieldy free-text risk descriptions based on their semantic similarity. The firm’s subject matter experts used the results to identify common and relevant themes that informed the design of a standard risk taxonomy that will significantly streamline their risk identification and assessment processes.

Identifying groups and patterns within and across datasets is also beneficial for advanced analytics and reporting needs. EK leveraged these AI techniques for a biotechnology company to aggregate and normalize disparate data from multiple legacy systems, providing a full-scale view for comprehensive analysis. EK incorporated this data into the semantic layer and, in turn, automated the generation of regulatory reports and detailed process analytics.

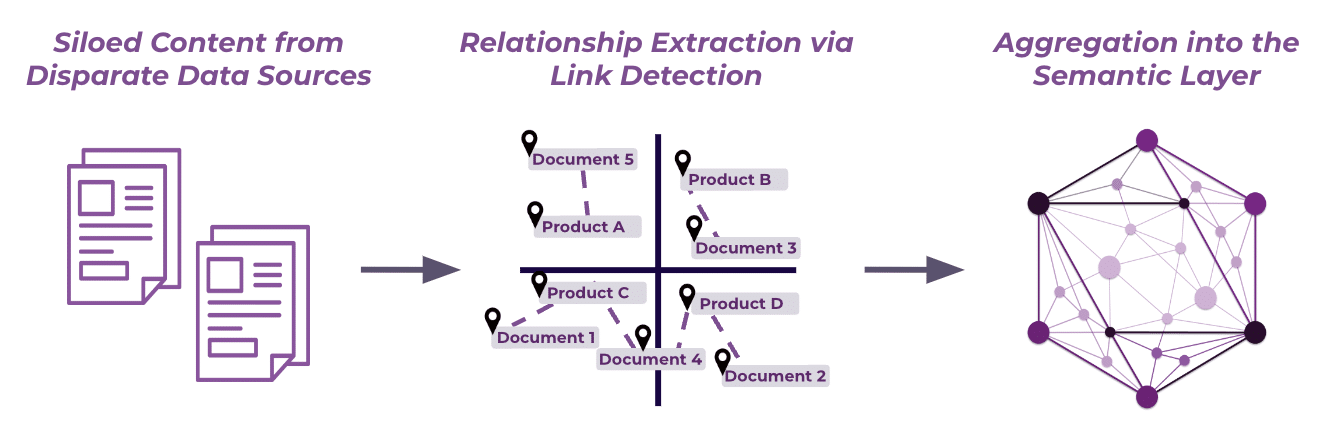

3. Link Detection

Link detection algorithms identify relationships and connections between entities or concepts within a dataset. Uncovering these links enables the construction of semantic networks or graphs, providing a structured representation of an organization’s knowledge domain. Link detection surfaces connections and patterns across data to enhance navigation and semantic search capabilities, ultimately facilitating comprehensive knowledge discovery and efficient information retrieval.
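One simple way to sketch link detection is neighborhood-based link prediction: two nodes that are not yet connected but share many neighbors are good candidates for a new link. The example below scores candidate pairs with the Jaccard coefficient of their neighbor sets. The node names and edges are hypothetical, and this is only one of many possible scoring approaches.

```python
from itertools import combinations


def build_adjacency(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Build an undirected adjacency map from a list of edges."""
    adjacency: dict[str, set[str]] = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def predict_links(
    edges: list[tuple[str, str]], min_score: float = 0.0
) -> list[tuple[str, str, float]]:
    """Score unconnected node pairs by the Jaccard overlap of their neighbors.

    Returns (node_a, node_b, score) tuples with score above min_score,
    highest score first.
    """
    adjacency = build_adjacency(edges)
    scored = []
    for a, b in combinations(sorted(adjacency), 2):
        if b in adjacency[a]:
            continue  # already linked, nothing to predict
        shared = adjacency[a] & adjacency[b]
        union = adjacency[a] | adjacency[b]
        score = len(shared) / len(union)
        if score > min_score:
            scored.append((a, b, score))
    return sorted(scored, key=lambda item: (-item[2], item[0], item[1]))


if __name__ == "__main__":
    # Hypothetical graph: products and content items tagged with topics.
    tagged = [
        ("product:centrifuge", "topic:cell-culture"),
        ("product:centrifuge", "topic:sample-prep"),
        ("content:prep-guide", "topic:sample-prep"),
        ("content:prep-guide", "topic:cell-culture"),
        ("content:pricing-faq", "topic:billing"),
    ]
    for a, b, score in predict_links(tagged):
        print(a, b, round(score, 2))
```

Here the product and the guide share both of their topics, so the pair surfaces as a candidate link that could be added to the graph after review.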

For a global scientific solutions and services provider, EK utilized link detection and prediction algorithms to develop a context-based recommendation system. The semantic layer established links between product data and product marketing content, expediting content aggregation and enabling personalization in the recommender interface for an intuitive and tailored user experience.

4. Categorization

Categorization automatically classifies data or text into predefined categories or topics based on content or features. Auto-tagging and classification are powerful techniques for organizing and typifying content from multiple sources so that it can be fed into a single repository within the semantic layer. This streamlines information management processes for enhanced data access, connectivity, findability, and discoverability.
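The sketch below shows the simplest form of this idea: a rule-based classifier that assigns a text to whichever predefined category has the most keyword hits. The category names and keywords in `CATEGORY_KEYWORDS` are hypothetical, and a trained classifier would often replace the keyword scoring.

```python
import re
from collections import Counter

# Hypothetical categories and the keywords that signal each one.
CATEGORY_KEYWORDS = {
    "finance": {"invoice", "budget", "payment"},
    "hr": {"salary", "hiring", "benefits"},
    "engineering": {"design", "prototype", "testing"},
}


def categorize(
    text: str,
    rules: dict[str, set[str]],
    default: str = "uncategorized",
) -> str:
    """Return the category whose keywords occur most often in the text.

    Falls back to `default` when no keyword matches. Ties go to the
    category listed first in `rules`.
    """
    if not rules:
        return default
    words = Counter(re.findall(r"[a-z]+", text.lower()))
    scores = {
        category: sum(words[keyword] for keyword in keywords)
        for category, keywords in rules.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default


if __name__ == "__main__":
    print(categorize("Q3 budget and invoice approvals", CATEGORY_KEYWORDS))
    print(categorize("Lunch menu for Friday", CATEGORY_KEYWORDS))
```

The returned category can be written back as a tag on the content item, so that content from every source carries labels drawn from the same predefined set.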

EK leverages AI-based categorization to enable our clients to consistently organize data according to the access and security requirements that apply within their organizations. For example, a leading federal research organization struggled to manage large amounts of unstructured data across various platforms, resulting in inefficient data access and an increased risk of sensitive data exposure. EK automated content categorization based on predefined sensitivity rules and built a dashboard powered by the semantic layer to streamline the identification and remediation of access issues for overshared sensitive content. With the initial proof of concept, EK successfully automated the scanning and analysis of about 30,000 documents to identify disparities between sensitivity labels and access designations without burdensome manual effort.

Conclusion

AI techniques can be used to facilitate data aggregation, standardization, and semantic enrichment for curated input into the semantic layer, as well as to build upon the semantic layer for advanced downstream applications from semantic search to recommendation engines. By harnessing the combined power of AI and semantic layer components, organizations can accelerate the digital transformation of their knowledge and data landscapes and establish truly data-driven processes across the enterprise. Contact EK to see if your organization is ready for AI and learn how you can get started with your own AI-driven semantic layer initiatives.