Enterprise Knowledge Graph Governance Best Practices

Successfully building, implementing, and scaling an enterprise knowledge graph is a serious undertaking. Those who have been successful at it would emphasize that it takes a clear definition of need (use cases), an appetite to start small, and a few iterations to get it right. When done right, a knowledge graph delivers valuable business outcomes: it gives your organization a scalable, flexible way to enrich data and information with institutional knowledge, and it aggregates content from numerous sources so that your systems can understand the context and the evolving nature of your business domain.

In my work on multiple knowledge graph implementation projects, the most common question I get is, “What does it take for an organization to maintain and update an enterprise knowledge graph?” Though many organizations have successfully built knowledge graph pilots and prototypes that adequately demonstrate the potential of the technology, few have deployed an enterprise knowledge graph that proves out the true business value and ROI this technology offers. Forethought about governance from the get-go plays a key role in ensuring that the upfront investment in a tangible solution remains a long-term success. Here, I’ll share the key considerations and the approaches we have found effective for growing and managing an enterprise knowledge graph so that it continues serving the upstream and downstream applications that rely on it.

First and foremost, building an effective knowledge graph begins with understanding and defining clear use cases and the business problems that it will be solving for your organization. Starting here will enable you to anticipate and tackle questions like:

“Who will be the primary end-users or subject matter experts?”

“What type of data do you need?”

“What data or systems will it be applied to?”

“How often does your data change?”

“Who will be updating and maintaining it?”

Addressing these questions early on will not only allow you to shape your development and implementation scope, but also help you define a repeatable process for managing change and future efforts. The sections below cover specific areas to consider when getting started.

1. Build it Right – Use Standards

As a natural integration framework, an enterprise knowledge graph is part of an architectural layer that consists of a wide array of solutions, ranging from the organizational data itself, to data models that support object- or context-oriented information models (taxonomy, ontology, and a knowledge graph), to user-facing applications that allow you to interact with data and information directly (search, analytics dashboards, chatbots, etc.). Thus, properly understanding and designing the architecture is one of the most fundamental steps in making sure the graph doesn’t become stale or irrelevant.

A practical knowledge graph needs to leverage common semantic information organization models such as metadata schemas, taxonomies, and ontologies. These serve as data models or schemas by representing your content in systems and placing constraints on what types of business entities are connected to a graph and related to one another. Building a knowledge graph through these layers, which serve as “blueprints” of your business processes, helps maintain the identity and structure your knowledge graph needs to continue growing and evolving through time. A knowledge graph built on explicitly defined logical models makes your business logic machine-readable and allows your systems to understand the context and relationships of your data and your business entities. Using these unifying data models also enables you to integrate data in different formats (for example, unstructured PDF documents, relational databases, and structured text formats like XML and JSON), rendering your enterprise data interconnected and reusable across disparate and diverse technologies such as Content Management Systems (CMS) or Customer Relationship Management (CRM) systems.

When building these information models (taxonomies and ontologies), leveraging semantic web standards such as the Resource Description Framework (RDF), the Simple Knowledge Organization System (SKOS), and the Web Ontology Language (OWL) offers many long-term benefits by facilitating governance, interoperability, and scale. Specifically, leveraging these well-established standards when developing your knowledge graph allows you to:

- Represent and transfer information across multiple systems, solutions, or types of data/content and avoid vendor lock-in to proprietary solutions;

- Share your content internally across the organization or externally with other organizations;

- Support and integrate with publicly available taxonomies, ontologies, and linked open data sources to jump start your enterprise semantic models or to enrich your existing information architecture with industry standards; and

- Enable your systems to understand business vocabulary and design for its evolution.
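To make the portability point concrete, here is a minimal sketch of two taxonomy concepts expressed with SKOS terms and written out as N-Triples, a plain-text RDF serialization that most graph tools can read. The `https://example.org/taxonomy/` namespace, the concepts, and the helper functions are hypothetical and chosen only for illustration; the RDF and SKOS namespace URIs are the standard W3C ones. A real project would more likely use an RDF library or a taxonomy management tool than hand-built strings.

```python
# Standard W3C namespace URIs for RDF and SKOS.
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
SKOS_NS = "http://www.w3.org/2004/02/skos/core#"
# Hypothetical namespace for an organization's own taxonomy (an assumption).
EX_NS = "https://example.org/taxonomy/"


def iri(value):
    """Wrap an absolute IRI in angle brackets, as N-Triples requires."""
    return f"<{value}>"


def literal(text, lang="en"):
    """Format a language-tagged literal, escaping backslashes and quotes only."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"@{lang}'


tax_services = iri(EX_NS + "tax-services")
corporate_tax = iri(EX_NS + "corporate-tax")

# Each statement is a (subject, predicate, object) triple.
triples = [
    (tax_services, iri(RDF_NS + "type"), iri(SKOS_NS + "Concept")),
    (tax_services, iri(SKOS_NS + "prefLabel"), literal("Tax services")),
    (corporate_tax, iri(RDF_NS + "type"), iri(SKOS_NS + "Concept")),
    (corporate_tax, iri(SKOS_NS + "prefLabel"), literal("Corporate tax")),
    (corporate_tax, iri(SKOS_NS + "altLabel"), literal("Business tax")),
    (corporate_tax, iri(SKOS_NS + "broader"), tax_services),
]


def to_ntriples(rows):
    """Serialize triples as N-Triples: one 'subject predicate object .' per line."""
    return "\n".join(f"{s} {p} {o} ." for s, p, o in rows)


def narrower_concepts(rows, parent):
    """Return the concepts that declare `parent` as their skos:broader concept."""
    broader = iri(SKOS_NS + "broader")
    return [s for s, p, o in rows if p == broader and o == parent]


print(to_ntriples(triples))
```

Because the labels and hierarchy use shared SKOS properties rather than a tool-specific format, the same file can in principle be loaded into another standards-compliant system without remapping.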

2. Understand the Frequency of Change and the Volume of Your Data

A viable knowledge graph solution is closely linked to the business model and domain of the organization, which means it should always be relevant, up to date, and accurate, and should scale to cover all valuable sources of information. Frequent changes to your data model or knowledge graph mean that your organization’s domain is in constant flux and that your knowledge and information need to keep up.

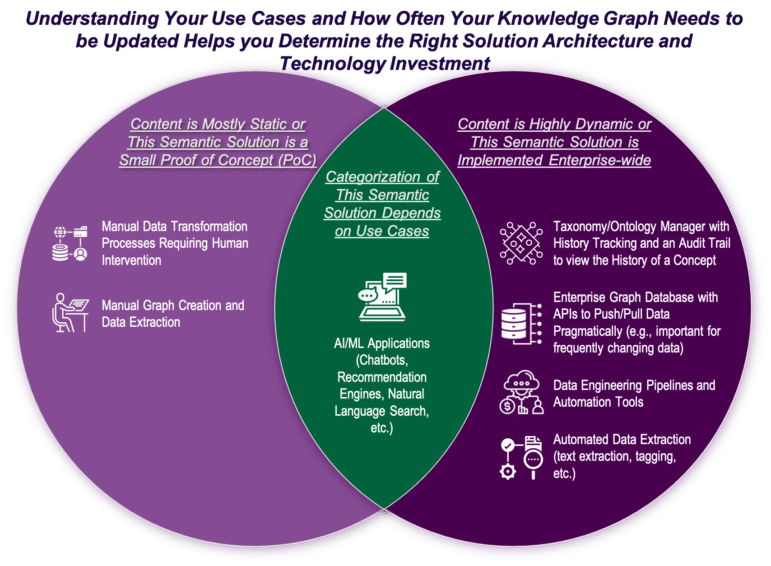

These types of changes should not require the rebuilding or restructuring of your entire graph. As such, depending on your industry and use cases, determining how often your data changes and at what intervals the graph should be updated is a good starting point for designing a governance model that fits your enterprise knowledge graph.

For instance, for our clients in the accounting or tax domain, industry and organizational vocabulary/metadata and their underlying processes/content are relatively static. Therefore, the knowledge, entities, and processes in their business domain don’t typically change that frequently. This means real-time updating and editing of their knowledge graph solution at scale may not be a need or capability that requires focus right away. Such use cases allow these organizations to realize savings by shifting the focus from enterprise-level metadata management tools or large-scale data engineering solutions to effectively defining their data model and governance to address the immediate use cases or business requirements at hand.

In other scenarios, such as for our clients in the digital marketing and analytics industry, obtaining a 360-degree view of a consumer in real time is their bread and butter. This means that marketing and analytics teams need to know immediately when, for example, a “marketable consumer” changes their address or contact information. It is imperative in this case that such rapidly changing business domains have the resources, capabilities, and automation necessary to update and govern their knowledge graphs at scale.
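One way to picture updating a graph without rebuilding it is to compute a delta between the current graph and an incoming snapshot, then apply only the additions and removals. The sketch below assumes the graph fits in memory as a set of (subject, predicate, object) tuples; the identifiers and property names are hypothetical. A production graph store would typically offer its own update mechanism, so treat this as an illustration of the idea rather than a recommended implementation.

```python
def diff_graph(current, incoming):
    """Return (to_add, to_remove) such that applying both turns current into incoming."""
    to_add = incoming - current
    to_remove = current - incoming
    return to_add, to_remove


def apply_delta(graph, to_add, to_remove):
    """Apply a delta in place, leaving every unaffected triple untouched."""
    graph.difference_update(to_remove)
    graph.update(to_add)
    return graph


# Hypothetical current state of a small consumer graph.
graph = {
    ("consumer:42", "hasAddress", "12 Elm St"),
    ("consumer:42", "hasEmail", "a@example.com"),
    ("consumer:7", "hasAddress", "9 Oak Ave"),
}

# Hypothetical incoming snapshot: consumer 42 has moved.
incoming = {
    ("consumer:42", "hasAddress", "80 Pine Rd"),
    ("consumer:42", "hasEmail", "a@example.com"),
    ("consumer:7", "hasAddress", "9 Oak Ave"),
}

to_add, to_remove = diff_graph(graph, incoming)
apply_delta(graph, to_add, to_remove)
print(f"added {len(to_add)}, removed {len(to_remove)}, total {len(graph)}")
```

For a slow-changing domain, a delta like this might be computed on a scheduled batch; for a fast-changing one, the same add/remove pairs would more likely arrive as a stream of events.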

3. Develop Programmatic Access Points to Connect Your Applications

Common enterprise knowledge graph solutions are constructed through data transformation pipelines. This renders a repeatable process for the mapping of structured sources and the extraction, disambiguation, classification, and tagging of unstructured sources. It also means that the main way to affect the data in the knowledge graph is to govern the input data (e.g. exports from taxonomy management systems, content management platforms, database systems, etc.). Otherwise, ad-hoc changes to the knowledge graph will be lost or erased every time new data is loaded from a connected application.

Therefore, designing and implementing a repeatable data extraction and application model that is guided by the governance of the source systems is one of the fundamental architectures to build a reliable knowledge graph.
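The sketch below illustrates why ad-hoc edits get lost in a pipeline-built graph. It assumes each source system's export is mapped into its own partition of the graph and that a reload replaces that partition wholesale. The `PipelineGraph` class, the CRM record fields, and the property names are all hypothetical.

```python
class PipelineGraph:
    """Toy graph that keeps one set of triples per source system."""

    def __init__(self):
        self.graphs = {}  # source name -> set of (subject, predicate, object)

    def load_source(self, source, records, mapper):
        """Rebuild one source's partition from its latest export."""
        self.graphs[source] = {t for record in records for t in mapper(record)}

    def triples(self):
        """Return the union of all source partitions."""
        return set().union(*self.graphs.values())


def map_crm_record(record):
    """Map one hypothetical CRM export row to triples."""
    subject = f"customer:{record['id']}"
    yield (subject, "hasName", record["name"])
    yield (subject, "hasSegment", record["segment"])


crm_export = [{"id": 1, "name": "Acme Corp", "segment": "SMB"}]
kg = PipelineGraph()
kg.load_source("crm", crm_export, map_crm_record)

# An ad-hoc edit made directly in the graph...
kg.graphs["crm"].discard(("customer:1", "hasSegment", "SMB"))
kg.graphs["crm"].add(("customer:1", "hasSegment", "Enterprise"))

# ...is erased by the next scheduled load from the unchanged source.
kg.load_source("crm", crm_export, map_crm_record)
edit_lost = ("customer:1", "hasSegment", "Enterprise") not in kg.triples()

# Correcting the record in the source system makes the change durable.
crm_export[0]["segment"] = "Enterprise"
kg.load_source("crm", crm_export, map_crm_record)
edit_kept = ("customer:1", "hasSegment", "Enterprise") in kg.triples()

print(edit_lost, edit_kept)
```

The design choice worth noting is that corrections flow through the source export, which keeps the graph reproducible from its inputs.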

4. Put Validation Checks and Analytics Processes in Place

Apply checks to identify conflicting information within your knowledge graph. Even though it’s rather challenging to train a knowledge graph to automatically know the right way to organize new knowledge and information, the ability to track and check why certain attributes and values were applied to your data or content should be part of the design for all data that is aggregated in the solution. One technique we’ve used is to segment inferred or predicted data into a separate graph reserved for new and uncertain information. In this way, uncertain data can be isolated from observed or confirmed information, making it easier to trace the origins of inferred information, or to recompute inferences and predictions as your underlying data or artificial intelligence models change. Confidence scores or ratings in both entities and relationships can also be used to indicate graph accuracy. Additional effective practices that provide checks and processes for creating and updating a knowledge graph include instituting consistent naming conventions throughout the design and implementation (e.g., URIs) and establishing guidelines for version control and workflows, including a log of all changes and edits to the graph. Many enterprise knowledge graphs also support the SHACL Semantic Web standard, which can be used to validate your graph when adding new data and check for logical inconsistencies.
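As a rough illustration of the segmentation technique, the sketch below keeps observed statements and inferred statements in separate sets, attaches a confidence score and a source to each statement, and flags conflicts on properties that should have only one value. It is a plain-Python stand-in, not SHACL; the `Statement` type, the property names, the model names, and the 0.9 threshold are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    subject: str
    predicate: str
    obj: str
    confidence: float = 1.0    # 1.0 for observed or confirmed facts
    source: str = "observed"   # where the statement came from


# Confirmed information lives in one graph...
asserted = {
    Statement("org:acme", "headquarteredIn", "city:boston"),
    Statement("org:acme", "hasIndustry", "industry:accounting"),
}
# ...and inferred or predicted information in another (hypothetical models).
inferred = {
    Statement("org:acme", "headquarteredIn", "city:austin", 0.55, "model:geo-v1"),
    Statement("org:acme", "hasIndustry", "industry:tax", 0.92, "model:classifier-v2"),
}

# Assumption: these properties may hold at most one value per subject.
SINGLE_VALUED = {"headquarteredIn"}


def find_conflicts(statements, single_valued):
    """Return {(subject, predicate): values} where a single-valued property has several values."""
    values = defaultdict(set)
    for st in statements:
        if st.predicate in single_valued:
            values[(st.subject, st.predicate)].add(st.obj)
    return {key: objs for key, objs in values.items() if len(objs) > 1}


def promotable(inferred_graph, asserted_graph, threshold, single_valued):
    """Select inferred statements that meet the threshold and introduce no conflict."""
    accepted = set()
    for st in inferred_graph:
        if st.confidence < threshold:
            continue
        if find_conflicts(asserted_graph | accepted | {st}, single_valued):
            continue
        accepted.add(st)
    return accepted


conflicts = find_conflicts(asserted | inferred, SINGLE_VALUED)
ready = promotable(inferred, asserted, 0.9, SINGLE_VALUED)
print(sorted(conflicts), sorted(st.obj for st in ready))
```

Because every inferred statement carries its source, dropping or recomputing the output of one model amounts to filtering the inferred set by that source, without touching the confirmed graph.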

5. Develop a Governance Plan and Operating Model

An effective knowledge graph governance model addresses the common set of standards and processes to handle changes and requests to the knowledge graph and peripheral systems at all levels. Specifically, a good knowledge graph governance model will provide an approach or specification for the following:

- Governance roles and responsibilities. Common governance roles include a governance group of taxonomists/ontologists, data engineers or scientists, database and application managers and administrators, and knowledge or business representatives or analysts;

- Governance around data sources that feed the knowledge graph. For instance, when unclean data comes in from a source system, there should be specific roles and processes for correcting it;

- Specific processes for updating the knowledge graph in the system where it is managed (i.e., processes to ensure major and minor changes to the knowledge graph are accurately assessed and implemented), including governance around adding new data sources: what the process looks like, who needs to be involved, etc.;

- Approaches to handle changes to the underlying ontology data model. Common change requests include the addition, modification, or deprecation of ontological classes, attributes, synonyms, or relationships;

- Approaches to tackling common barriers to building and enhancing a successful ontology and knowledge graph. Common challenges include the lack of effective text analytics and extraction tools to automate the organization of content and the application of tags/relationships, and the lack of intuitive ways to manage and update Linked Data;

- Guidance on communication to stakeholders and end users including sample messaging and communication best practices and methods; and

- Review cadence. Identify common intervals for changes and adjustments to the knowledge graph solution by understanding the complexity and fluidity of your data, and build in recurring review cycles and governance meetings accordingly.
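Parts of a governance plan like the one outlined above can be enforced in code. The sketch below is one possible shape for it, under stated assumptions: a change request must name an approver before it is applied, a deprecated class is flagged rather than deleted, and every applied change is appended to a log. The `ChangeRequest` fields, the role names, and the dictionary-based ontology are hypothetical; in an OWL ontology the analogous flag would typically be the `owl:deprecated` annotation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeRequest:
    kind: str                          # "add" or "deprecate" in this sketch
    target: str                        # name of the ontology class affected
    requested_by: str
    approved_by: Optional[str] = None  # set by the governance group


def apply_change(ontology, change_log, request):
    """Apply an approved request to the ontology and record it in the log."""
    if request.approved_by is None:
        raise PermissionError(f"change to {request.target!r} has not been approved")
    if request.kind == "add":
        ontology[request.target] = {"deprecated": False}
    elif request.kind == "deprecate":
        # Flag instead of deleting so existing data that uses the class stays valid.
        ontology[request.target]["deprecated"] = True
    else:
        raise ValueError(f"unsupported change kind: {request.kind!r}")
    change_log.append(request)


ontology = {"Invoice": {"deprecated": False}}
change_log = []

request = ChangeRequest("deprecate", "Invoice", requested_by="analyst")
try:
    apply_change(ontology, change_log, request)
    rejected = False
except PermissionError:
    rejected = True  # unapproved requests never reach the ontology

request.approved_by = "ontology-governance-group"
apply_change(ontology, change_log, request)
print(rejected, ontology["Invoice"]["deprecated"], len(change_log))
```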

Closing

As a representation of an organization’s knowledge, an enterprise knowledge graph allows for aggregation of a breadth of information across systems and departments. If left with no ownership and plan, it can easily grow out of sync and result in rework, redesign and a lot of wasted effort.

Whether you are just beginning to design an enterprise knowledge graph and wish to understand the value and benefits, or you are looking for a proven approach for defining governance, maintenance, and a plan to scale, check out our additional thought leadership and real-world case studies to learn more. Our expert graph engineers and consultants are also on standby if you need any support. Contact us with any questions.
