The capabilities of artificial intelligence (AI) and large language models (LLMs) have generated widespread excitement. Recent advancements have made natural language use cases, like chatbots and semantic search, more feasible for organizations. However, the significant role that ontologies play alongside AI and LLMs is often overlooked. People often ask: do LLMs replace ontologies or complement them? Are ontologies becoming obsolete, or are they still relevant in this rapidly evolving field?

In this blog, I will explain the continuing importance of ontologies in your organization’s quest for better knowledge retrieval and in augmenting the capabilities of LLMs.

Defining Ontologies and LLMs

Let’s start with quick definitions to ensure we have the same background information.

What is an Ontology

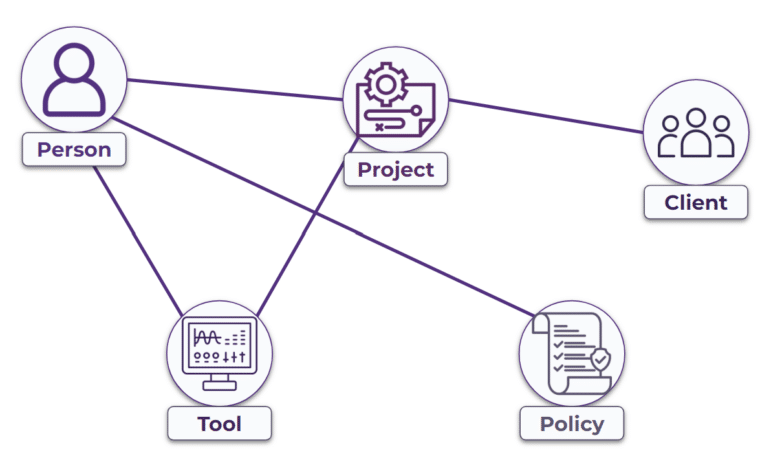

An ontology is a data model that describes a knowledge domain, typically within an organization or particular subject area, and provides context for how different entities are related. For example, an ontology for Enterprise Knowledge could include the following entity types:

- Clients

- People

- Policies

- Projects

- Experts

- Tools

The ontology includes properties for each type, e.g., people’s names and projects’ start and end dates. Additionally, the ontology contains the relationships between types, such as people work on projects, people are experts in tools, and projects are with clients.

Ontologies define the model often used in a knowledge graph, the database of real-world things and their connections. For instance, the ontology describes types like people, projects, and clients, and the corresponding knowledge graph would contain actual data, such as information about James Midkiff (Person), who worked on semantic search (Project) for a multinational development bank (Client).
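To make the split between ontology and knowledge graph concrete, here is a minimal Python sketch. The dictionary layout, the relationship names (`works_on`, `is_expert_in`, `is_with`), and the `is_valid` helper are assumptions made for illustration, not a standard ontology format; in practice an ontology would usually be written in a language such as OWL or RDFS.

```python
from dataclasses import dataclass

# Ontology: the entity types, their properties, and the relationships
# allowed between types. Names here are illustrative assumptions.
ONTOLOGY = {
    "types": {
        "Person": ["name"],
        "Project": ["name", "start_date", "end_date"],
        "Client": ["name"],
        "Tool": ["name"],
    },
    # Each entry is (subject type, relationship, object type).
    "relationships": {
        ("Person", "works_on", "Project"),
        ("Person", "is_expert_in", "Tool"),
        ("Project", "is_with", "Client"),
    },
}


@dataclass(frozen=True)
class Entity:
    type: str
    name: str


def is_valid(subject, relationship, obj):
    """Check that a fact uses a relationship the ontology allows."""
    return (subject.type, relationship, obj.type) in ONTOLOGY["relationships"]


# Knowledge graph: actual data that follows the ontology.
james = Entity("Person", "James Midkiff")
search = Entity("Project", "semantic search")
bank = Entity("Client", "multinational development bank")

KNOWLEDGE_GRAPH = [
    (james, "works_on", search),
    (search, "is_with", bank),
]

# Every fact in the graph should conform to the model.
all_valid = all(is_valid(s, r, o) for s, r, o in KNOWLEDGE_GRAPH)
```

The ontology says which kinds of statements are possible; the knowledge graph holds the statements themselves. A fact such as a client working on a project would be rejected because the ontology defines no such relationship.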

What is an LLM

An LLM is a model trained on large volumes of text to capture human sentence structure and meaning. The model can interpret text inputs and generate outputs that follow correct grammar and usage. To briefly describe how an LLM works: LLMs represent text as vectors, known as embeddings. Embeddings act like a numerical fingerprint of a piece of text, and texts with similar meanings have similar embeddings. Drawing on patterns learned from its training set, the LLM relates the embeddings of the input text to one another and pieces together an answer. For example, an LLM can be provided with a large document and asked to summarize it. Because the model represents the meaning of the document as embeddings, it can compile a summary from the provided text.
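The idea of comparing embeddings mathematically can be sketched with cosine similarity, a common way to measure how close two vectors are. The three-dimensional vectors below are made up for illustration; real embeddings come from a model and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings: hand-written numbers, NOT output from a real model.
TOY_EMBEDDINGS = {
    "knowledge graph": [0.9, 0.1, 0.3],
    "ontology": [0.8, 0.2, 0.4],
    "lunch menu": [0.1, 0.9, 0.2],
}


def most_similar(query, embeddings):
    """Return the text whose embedding is closest to the query's embedding."""
    query_vec = embeddings[query]
    others = {text: vec for text, vec in embeddings.items() if text != query}
    return max(others, key=lambda text: cosine_similarity(query_vec, others[text]))


closest = most_similar("ontology", TOY_EMBEDDINGS)
```

With these toy numbers, "ontology" sits much closer to "knowledge graph" than to "lunch menu", which is the behavior a well-trained embedding model is expected to show for related concepts.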

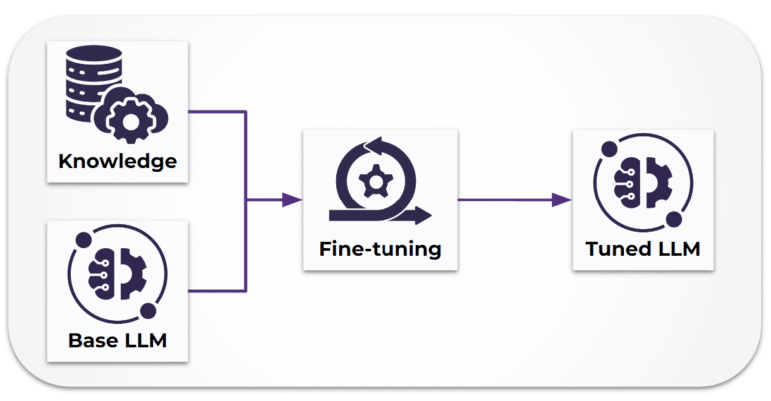

Organizations can take advantage of openly available models like Llama 2, BLOOM, and BERT, as developing and training custom LLMs can be prohibitively expensive. While utilizing these models, organizations can fine-tune (extend) them with domain-specific information to help the LLM understand the nuances of a particular field. Fine-tuning is much less expensive than training a model from scratch and can improve the accuracy of a model’s output.

Integrating Ontologies and LLMs

When an organization begins to utilize LLMs, several common concerns emerge:

- Hallucinations: LLMs are prone to hallucinate, returning incorrect results based on incomplete or outdated training data or by making statistically based best guesses.

- Knowledge Limitation: Out of the box, LLMs can only answer questions from their training set and the provided input text.

- Unclear Traceability: LLMs return answers based on their training data and statistics, and it is often unclear whether a given answer is a fact pulled from the training data or a guess.

These concerns are all addressed by providing LLMs with methods to integrate information from an organization’s knowledge domain.

Fine-tuning with a Knowledge Graph

Ontologies model the facts within an organization’s knowledge domain, while a knowledge graph populates these models with actual, factual values. We can leverage these facts to customize and fine-tune the language model to align with the organization’s manner of describing and interconnecting information. This fine-tuning enables the LLM to answer domain-specific questions, accurately identify named entities relevant to the field, and generate language using the organization’s vocabulary.

Training an LLM with factual information presents challenges similar to those encountered with the original LLM: the training data can become outdated, leading to incomplete or inaccurate responses. To address this, fine-tuning an LLM should be considered a continuous process. Regularly updating the LLM with new and existing relevant information is necessary to maintain up-to-date language usage and factual accuracy. Additionally, it’s essential to diversify the training material fed into the LLM so that it sees content in various forms. This involves combining ontology-based facts with varied content and data from the organization’s domain, creating a training set that keeps the LLM balanced and not biased toward any single dataset.
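One way to turn knowledge graph facts into fine-tuning material is to render each fact as a question and answer pair. The sketch below assumes facts are stored as (subject, relationship, object) triples and that the training tool accepts JSON Lines records with `prompt` and `completion` fields; the templates and field names are illustrative assumptions, so check the format your fine-tuning framework actually expects.

```python
import json

# Facts from the knowledge graph as (subject, relationship, object) triples.
TRIPLES = [
    ("James Midkiff", "works_on", "semantic search"),
    ("semantic search", "is_with", "a multinational development bank"),
]

# One question/answer template per relationship (hypothetical wording).
TEMPLATES = {
    "works_on": ("Which project does {s} work on?", "{s} works on {o}."),
    "is_with": ("Which client is the {s} project with?", "The {s} project is with {o}."),
}


def triples_to_examples(triples, templates):
    """Render each triple as a prompt/completion pair, skipping unknown relationships."""
    examples = []
    for s, rel, o in triples:
        if rel not in templates:
            continue
        question, answer = templates[rel]
        examples.append({
            "prompt": question.format(s=s, o=o),
            "completion": answer.format(s=s, o=o),
        })
    return examples


def to_jsonl(examples):
    """Serialize examples as JSON Lines, one training record per line."""
    return "\n".join(json.dumps(example) for example in examples)


examples = triples_to_examples(TRIPLES, TEMPLATES)
training_file_contents = to_jsonl(examples)
```

Because the records are generated from the graph, rerunning the script after the graph changes gives a refreshed training set, which fits the continuous fine-tuning process described above. These templated facts should still be mixed with other content from the domain to keep the training set varied.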

Retrieval Augmented Generation

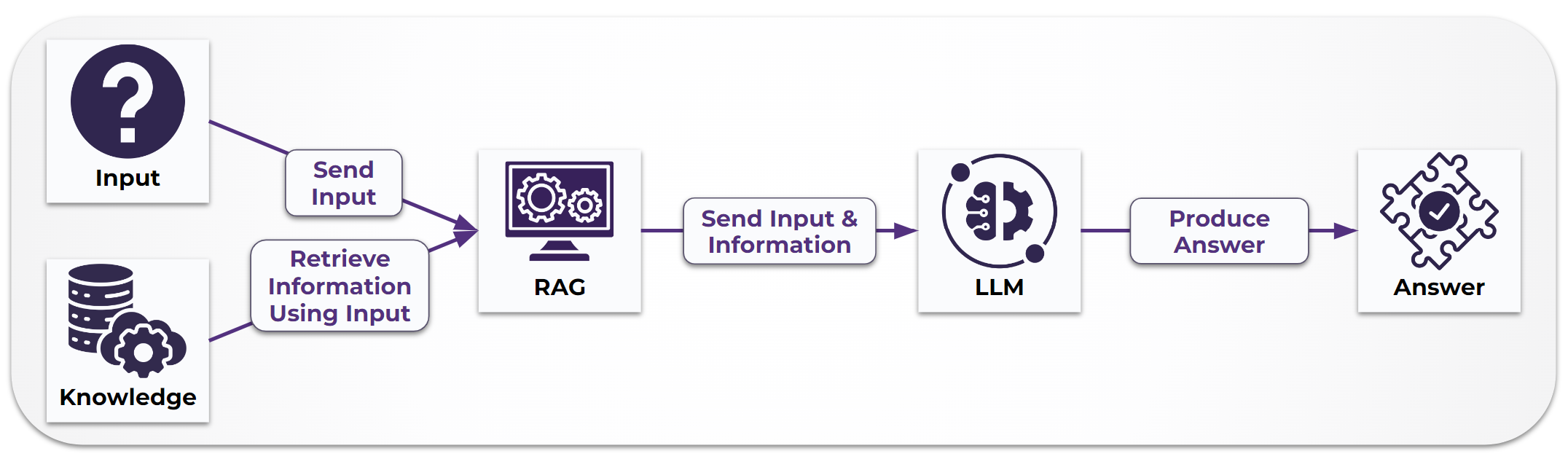

The primary method used to avoid stale or incomplete LLM responses is Retrieval Augmented Generation (RAG). RAG is a process that augments the input fed into an LLM with relevant information from an organization’s knowledge domain. Using RAG, an LLM can access information beyond its original training set, utilizing this information to produce more accurate answers. RAG can draw from diverse data sources, including databases, search engines (semantic or vector search), and APIs. An additional benefit of RAG is its ability to provide references for the sources used to generate responses.

We aim to leverage the ontology and knowledge graph to extract facts relevant to the LLM’s input, thereby enhancing the quality of the LLM’s responses. By providing these facts as inputs, the LLM can explicitly understand the relationships within the domain rather than discerning them statistically. Furthermore, feeding the LLM with specific numerical data and other relevant information increases the LLM’s ability to respond to complex queries, including those involving calculations or relating multiple pieces of information. With accurately tailored inputs, the LLM will provide validated, actionable insights rooted in the organization’s data.
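The retrieval step can be sketched in a few lines of Python. This is a simplified illustration: naive string matching stands in for a real graph query or vector search, the "Example Person" fact is a hypothetical placeholder, and the prompt wording is an assumption. The resulting prompt would then be sent to whichever LLM the organization uses.

```python
# Facts pulled from the knowledge graph as (subject, relationship, object).
# The last entry is a hypothetical placeholder to show filtering.
FACTS = [
    ("James Midkiff", "works on", "semantic search"),
    ("semantic search", "is with", "a multinational development bank"),
    ("Example Person", "works on", "example project"),
]


def retrieve_facts(question, facts):
    """Keep facts whose subject or object is mentioned in the question.

    Naive substring matching stands in for a graph query or vector search.
    """
    q = question.lower()
    return [f for f in facts if f[0].lower() in q or f[2].lower() in q]


def build_prompt(question, facts):
    """Augment the question with retrieved facts before it reaches the LLM."""
    lines = [f"- {s} {r} {o}." for s, r, o in retrieve_facts(question, facts)]
    context = "\n".join(lines) if lines else "- (no matching facts found)"
    return (
        "Answer using only the facts below, and cite the facts you used.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )


question = "Which projects has James Midkiff worked on?"
prompt = build_prompt(question, FACTS)
```

Only the fact that mentions James Midkiff ends up in the prompt, so the LLM answers from an explicit statement rather than a statistical guess, and the listed facts double as references for the answer.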

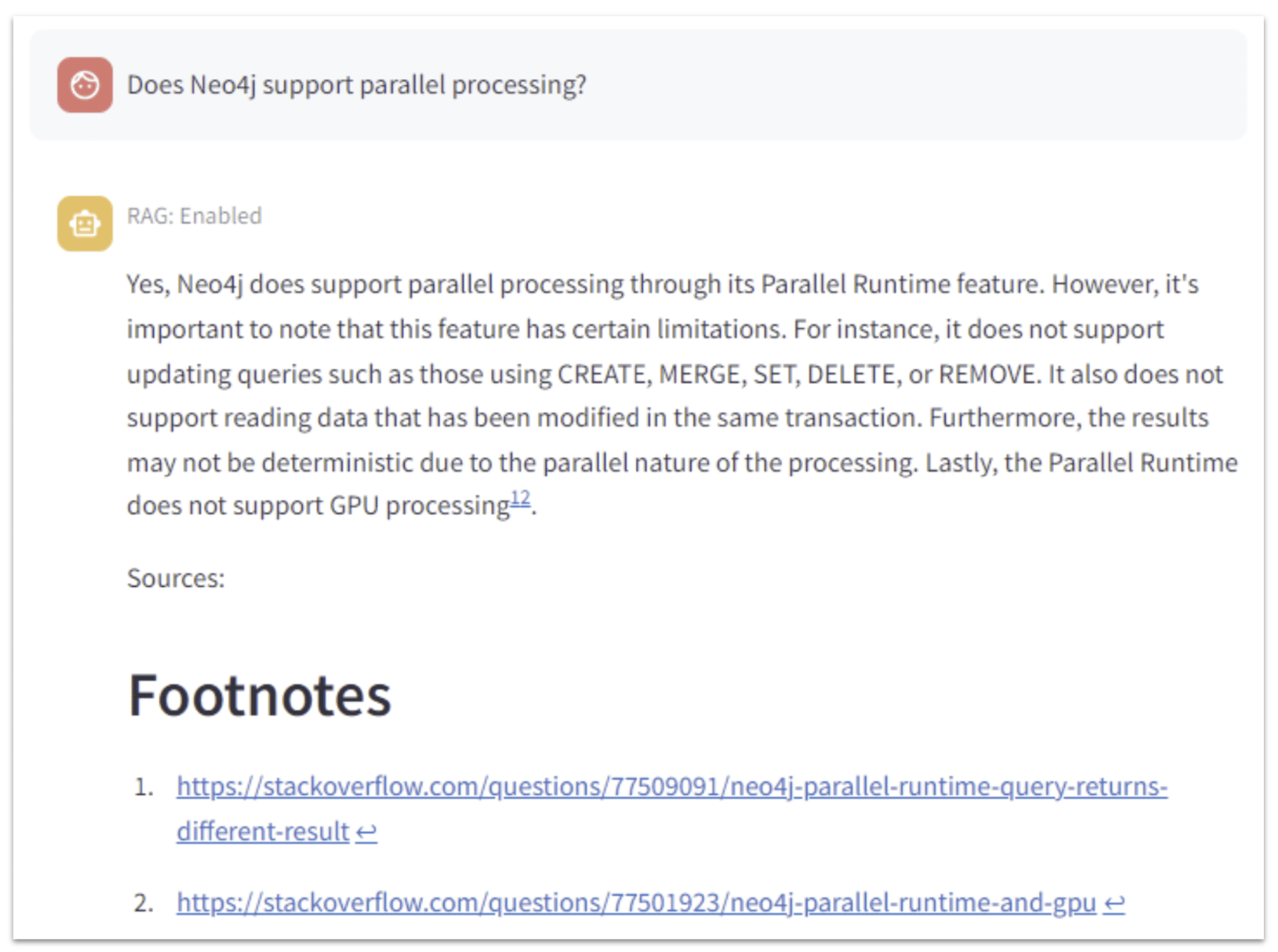

For an example of RAG in action, see the LLM input and response below using a GenAI stack with Neo4j.

Conclusion

LLMs are exciting tools that enable us to effectively interpret and utilize an organization’s knowledge and to quickly access valuable answers and insights. Integrating ontologies and their corresponding knowledge graphs ensures that the LLM accurately uses the language and factual content of an organization’s knowledge domain when generating responses. Are you interested in leveraging your organization’s knowledge with an LLM? Contact us for more information on how we can get started.