Earlier this year, I shared the top trends we are seeing when it comes to solving enterprise data management problems. We continue to deploy and put these emerging solutions to the test (read more on that from our case studies) as we work with organizations to push for digital transformation. The volume and diversity of information continue to expand, and the age-old challenges of siloed and unreliable data continue to threaten the returns on these large data transformation investments.

This is causing many organizations to revisit their data strategies, taking a deliberate and objective look at their business through the lens of data, anticipating ongoing disruptions, and building in the agility required to support their data teams.

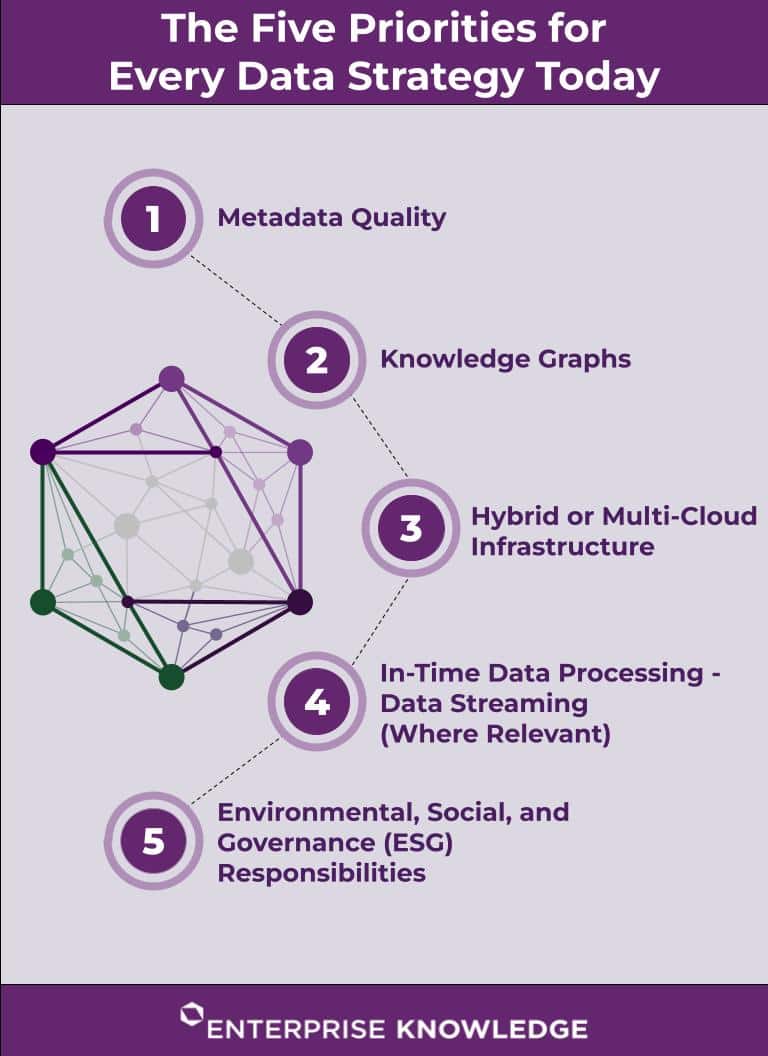

These are the components I consistently see as key ingredients of today’s most successful enterprise data strategies. They are especially critical for organizations that are undergoing a digital or data transformation, migrating to the cloud, or deploying new business offerings.

Metadata Quality

Quality data makes data easier to find, discover, reuse, trace, and govern, and ultimately connects people with the knowledge, content, and individuals they need to take action or make decisions. And good quality data begins with metadata.

The complexities of data quality and big data governance have increased primarily because of the silo between structured and unstructured data. Metadata bridges this gap through its essential and expanded role of ensuring that data can be found, used, secured, and reused by the appropriate parties.

We define quality metadata by the following “four Cs.” Quality metadata is:

- Complete: it has full coverage of the data/content it describes, so that it can accurately define, categorize, and label it.

- Correct: its fields and values appropriately and consistently describe the data.

- Contextualized: it provides meaningful information to describe and connect business knowledge.

- Connected: it is continuously analyzed and connected through a graph to capture unique, business-focused relationships.
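
As a rough illustration, three of the four Cs can be approximated with simple checks over catalog records. The sketch below is only that: the required fields, the controlled vocabulary, the `related_to` field, and the sample records are all hypothetical and not taken from any particular catalog product. "Contextualized" is left out because it is hard to reduce to a mechanical check in a sketch this small.

```python
# Hypothetical required fields and controlled vocabulary (assumptions for illustration).
REQUIRED_FIELDS = ("title", "owner", "domain")
ALLOWED_DOMAINS = {"finance", "hr", "sales"}


def completeness(record: dict) -> float:
    """Complete: share of required fields that are present and non-empty."""
    filled = sum(1 for name in REQUIRED_FIELDS if record.get(name))
    return filled / len(REQUIRED_FIELDS)


def is_correct(record: dict) -> bool:
    """Correct: the domain value comes from the controlled vocabulary."""
    return record.get("domain") in ALLOWED_DOMAINS


def is_connected(record: dict) -> bool:
    """Connected: the record links to at least one other asset."""
    return bool(record.get("related_to"))


# Made-up sample records.
catalog = [
    {"title": "Q3 revenue", "owner": "ana", "domain": "finance",
     "related_to": ["Q2 revenue"]},
    {"title": "Headcount", "owner": "", "domain": "people"},
]

report = [
    {
        "title": record["title"],
        "completeness": round(completeness(record), 2),
        "correct": is_correct(record),
        "connected": is_connected(record),
    }
    for record in catalog
]
```

Here the second record would be flagged on all three checks: its owner is empty, its domain is outside the vocabulary, and it links to nothing else.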

As a result, the modern data stack needs a way to deliver and connect metadata intuitively in order to handle the dynamism and size of big data. A starting point is a strategy for cataloging and understanding enterprise data, and for leveraging active metadata as a fabric or abstraction layer that connects data without moving the underlying data from its physical location (a data fabric architecture). An enterprise data catalog is one foundational component of the solution and a pillar of a forward-looking data strategy. Traditional data catalogs lack the flexibility to support new types of data sources as data continues to grow, so organizations whose use cases call for greater flexibility and higher-quality aggregation of contextual metadata are bringing in graph-powered data catalogs to properly integrate knowledge and data across the organization.

Knowledge Graphs

A knowledge graph, a model for applying business context (semantics) and capturing relationships between your data and content, allows organizations to create a machine-readable version of their collective institutional knowledge. Knowledge graphs serve as an abstraction layer that aggregates data that is heavily dependent on context, connectivity and relationships – in the way people think about and describe data – even if it is siloed and in diverse formats. There are a few ways a knowledge graph helps support digital transformation and data management capabilities:

- Applying organizational context and knowledge to data;

- Providing the link across siloed and diverse data and content through federated and virtualized access to data and a persistent graph database storage that stores detailed metadata and relationships together with data;

- Delivering explainable AI solutions by providing machine learning (ML) with the context, knowledge, and understanding it needs to deliver trustworthy and predictable recommendations; and

- Optimizing data governance throughout the lifecycle by serving as a flexible architecture that can model changes in data over time and thus help with data lineage, data mapping, and data quality through the analysis of network effects.
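
To make the idea of linking siloed data concrete, here is a minimal sketch that stores relationships as subject-predicate-object triples and walks them. It uses plain Python rather than a graph database, and every identifier in it (the customer, order, product, and document names, and the `reachable` helper) is hypothetical.

```python
from collections import defaultdict

# Hypothetical triples, each imagined as coming from a different silo.
triples = [
    ("customer:42", "placed", "order:7"),                       # CRM system
    ("order:7", "contains", "product:widget"),                  # order database
    ("product:widget", "described_in", "doc:spec-widget.pdf"),  # document store
]

# Index the triples by subject so relationships can be followed.
graph = defaultdict(list)
for subject, predicate, obj in triples:
    graph[subject].append((predicate, obj))


def reachable(start: str) -> list:
    """Return every node reachable from `start`, in breadth-first order."""
    seen, queue, order = {start}, [start], []
    while queue:
        node = queue.pop(0)
        for _, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


linked = reachable("customer:42")
```

Starting from a single customer, the traversal reaches the order, the product, and the specification document, even though each relationship was recorded in a separate system.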

Many of the organizations we know to be successful at delivering advanced user experiences, and to be ahead in their AI journeys, have adopted a lean data management and governance strategy that derives meaning and value from data for the enterprise and uses knowledge graph solutions to connect, organize, and standardize their data.

Hybrid or Multi-Cloud Infrastructure

We are seeing a rapid shift to cloud infrastructures for business and data processing. Over 80% of the organizations we are currently working with have some form of cloud migration or engineering efforts taking place.

As organizations accelerate their digital modernization and transformation efforts, it is becoming unrealistic to view the end-to-end data lifecycle without the computing power of cloud infrastructure. However, while businesses are making great investments in setting up their cloud infrastructures, most lack a well-rounded Cloud Strategy for Data Management, and concerns remain around privacy and security, performance, potential data loss, and the risk of unexpected costs due to the pay-as-you-go, usage-based consumption model.

Leading companies are developing actionable strategies around alignment between infrastructure and data teams, cloud data architecture, and data replication processes as part of their forward-looking data strategies. Cloud has quickly proven to be a competitive advantage for scaling data processing and enterprise AI and machine learning (ML) operations and capabilities.

One of our clients in the financial services sector serves as a great model for this. They developed their data strategy with cloud in mind nearly a decade ago. Today, they have adopted a data mesh model, treating data as a product and leveraging cloud solutions and semantic standards to facilitate a federated data governance model. They are now bringing to market some of the internal products and platforms they developed to adopt cloud-based data solutions in a well-managed way to help other companies navigate the cloud journey.

In-Time Data Processing – Data Streaming (Where Relevant)

Adopting a data streaming architecture is becoming an important aspect of data management strategy and implementation due to the increasing volume and scale of data organizations are dealing with as a result of digitization.

Data platforms need to process data as it streams into the organization to make time-sensitive decisions. Stream processing makes that possible by handling individual records, or micro batches of a few records, in real time rather than defaulting to large batches. Familiar examples of streaming data include the just-in-time updates we get when a product is in stock at a store or online, real-time stock trades, system log monitoring, and digital twins, to name just a few.
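
The difference between micro-batch and large-batch processing can be sketched in a few lines. The example below is an assumption-laden illustration, not a production pattern: `stock_events` is a hypothetical stand-in for a live feed (a real source would typically be a message broker), and `micro_batches` is a helper written for this sketch.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(records: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield lists of up to `size` records as soon as they are available."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def stock_events() -> Iterator[dict]:
    """Hypothetical in-stock feed with made-up SKUs and quantities."""
    for sku, quantity in [("A", 0), ("B", 5), ("A", 3), ("C", 0), ("B", 0)]:
        yield {"sku": sku, "in_stock": quantity > 0}


alerts = []
for batch in micro_batches(stock_events(), size=2):
    # React to each micro batch instead of waiting for the full data set.
    alerts.extend(event["sku"] for event in batch if event["in_stock"])
```

Because each micro batch is handled as it arrives, an in-stock alert can be raised after two records rather than after the whole feed has been collected.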

In the past, we were limited to a smaller number of data sources and to technologies that forced us to ingest, process, and structure data in batches before it could be acted upon. Today, many organizations are starting to operationalize a hybrid model as part of their data strategy and architecture to consume, store, enrich, and analyze data that is in motion. This further allows organizations to define independent services that deliver insights to specific use cases and stakeholders. It also provides a natural design for decoupling integrated legacy systems, which in turn breaks data management and governance efforts into smaller pieces and helps build out data processing capabilities incrementally.

Environmental, Social, and Governance (ESG) Responsibilities

Over the past decade, environmental, social, and governance (ESG) responsibility has gone beyond a business goal to become an emerging topic in data management and governance. Ensuring that data operations, services, and approaches support or meet ESG responsibilities, including corporate social responsibility, diversity and inclusion, and other environmental and social topics, has become an expectation for enterprises today.

Specifically, ESG impacts enterprise data strategy in two ways:

- Data strategy needs to identify relevant ESG frameworks and topics to collect, organize, and analyze all the necessary business data for tracking and reporting on ESG performance.

- Data strategy should define a framework for data management activities and operations to be run in the most sustainable and efficient manner – think of the environmental impact of processing crypto data or data obtained unethically.

We are seeing this firsthand in the increasing number of projects in which we help our clients either aggregate and manage ESG-related data to track and report on their performance, or model their ESG-related data and analytics processes to make business decisions, such as defining efficient route planning algorithms to improve their supply chain footprints. Further, we are leveraging knowledge graphs to help untangle complicated networks that can otherwise obscure the ESG impacts of business processes.

The business value? In addition to creating a healthier planet for all of us, adhering to ESG principles has proven to lower the cost of capital and improve operational performance. Thus, businesses have started using favorable ESG ratings to create a stronger case for investments against companies with lower ESG performance.

The lack of a single, mandatory ESG standard poses a challenge when it comes to embedding ESG principles within enterprise data strategies. However, there are commonly used ESG frameworks that enable data organizations to embed ESG within larger data management and governance efforts. Reporting standards or frameworks like the Global Reporting Initiative (GRI), the Task Force on Climate-related Financial Disclosures (TCFD), and the SASB Standards are the most common to consider. Developing a strategy, policies, and procedures that promote commitment to responsible business (CSR, sustainability, ethics) should be top of mind for organizational data today.

The underlying tenets of an enterprise data strategy need to align data with overall business, people, process, and technology goals. The bottom line is that data-driven enterprises, Chief Data Officers (CDOs), and business executives are in a race to make and show progress in capturing the highest value from their data efforts. Understanding the characteristics of these five data management staples and disruptions, and developing an actionable plan to execute on the capabilities they enable, will be what separates the organizations of today from the success stories of tomorrow.